The role of an administrator is crucial for managing IT systems, networks and digital platforms in an organization. An administrator has advanced permissions and responsibilities that allow them to control various aspects of the technical infrastructure and ensure that it is operated efficiently and securely. Here are some of the main responsibilities of an administrator:

User management: Administrators manage user accounts, access rights and permissions. They create new user accounts, assign them the necessary permissions and manage access control to ensure that only authorized users can access certain resources.

Security: Administrators are responsible for the security of IT systems to protect against data loss and unauthorized access.

Troubleshooting and support: The administrator is often the first point of contact for technical issues. They help users troubleshoot and resolve problems and ensure that the system is running smoothly.

In addition to these responsibilities, administrators are also tasked with managing sensitive settings and ensuring that systems meet compliance requirements and information security best practices. This includes managing sensitive data, configuring access controls and permissions, and monitoring and analyzing system logs to identify and address potential security risks.

Security is an essential aspect of any organization, especially when it comes to managing user accounts and access rights. Here are some best practices to maintain a secure user management protocol:

Regular password updates: Encourage users to update their passwords regularly to keep their accounts secure. Establish password complexity policies and require the use of strong passwords that include a combination of letters, numbers, and special characters.

Monitor administrator actions: Implement mechanisms to monitor administrator activities to detect suspicious or unusual activity. Log all administrator actions, including access to sensitive data or settings, to ensure accountability and identify potential security breaches.

Limit the number of administrators: Reduce the number of administrators to a minimum and grant administrative privileges only to those who really need them. By limiting the number of administrators, you minimize the risk of security breaches and make it easier to manage and monitor user accounts.

Two-factor authentication (2FA): Implement two-factor authentication for administrator accounts to further increase security. This adds an extra verification step, so that even if a password is compromised, an attacker cannot gain unauthorized access to the account.

Regular security reviews: Conduct regular security reviews and audits to identify and fix potential security gaps or vulnerabilities. Review the access rights and permissions of user accounts to ensure that they match current requirements and best practices.

Training and awareness: Regularly train employees and administrators on security best practices and awareness of phishing attacks and other cyber threats. Make them aware of the importance of security and encourage them to report suspicious activity.

By implementing these best practices, organizations can improve the security of their user management protocol and minimize the risk of security breaches and data loss. It is important to view security as an ongoing process and make regular updates and adjustments to keep up with ever-changing threats and security requirements.
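The password complexity policy described above can be sketched as a small check. This is only an illustration: the minimum length of 12 and the function name `password_problems` are assumptions, not DocBits settings.

```python
import string

# Assumed policy value; adjust to your organization's rules.
MIN_LENGTH = 12


def password_problems(password: str) -> list[str]:
    """Return a list of policy violations; an empty list means the password passes."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if not any(c.isalpha() for c in password):
        problems.append("no letters")
    if not any(c.isdigit() for c in password):
        problems.append("no digits")
    if not any(c in string.punctuation for c in password):
        problems.append("no special characters")
    return problems
```

Returning the list of violations, rather than a plain yes or no, lets a form tell the user exactly what to fix.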

Groups and permissions allow administrators to control access to sensitive data and resources.

By assigning permissions at the group level, administrators can exercise granular control over who can access what information.

This helps prevent unauthorized access and data leaks.

The principle of least privilege states that users should only be given the permissions they need to perform their jobs.

By using groups, administrators can efficiently manage permissions and ensure that users can only access the resources relevant to their particular role or department.

Using groups makes it easier to manage user permissions in large organizations with many users and resources.

Instead of setting permissions individually for each user, administrators can assign permissions at the group level, simplifying administration and reducing administrative overhead.

Using groups and permissions allows organizations to monitor access activities and meet compliance requirements.

Logging access events allows administrators to perform audits to ensure that permissions are properly managed and that unauthorized access is not occurring.

Groups and permissions provide flexibility to adapt to changing needs and organizational structures.

Administrators can create groups based on departments, teams, projects, or other criteria and dynamically adjust permissions to ensure users always have access to the resources they need.

Overall, groups and permissions play a key role in increasing the security of IT infrastructure, improving operational efficiency, and ensuring compliance with policies and regulations. By managing groups and permissions wisely, organizations can effectively protect their data and resources while promoting employee productivity.

In a company with different departments such as finance, marketing, and human resources, employees in each department will need access to different types of documents and resources.

For example, the finance team needs access to financial reports and invoices, while HR needs access to employee data and payroll.

In a healthcare organization, different groups of employees will need different levels of access to patient data.

For example, doctors and nurses will need access to medical records and patient histories, while administrative staff may only need access to billing data and scheduling.

In an educational institution such as a university, different groups of users will need different levels of access to educational resources.

For example, professors will need access to course materials and grades, while administrators will need access to financial data and student information.

Companies may need to implement different levels of access to meet legal and compliance requirements.

For example, financial institutions may need to ensure that only authorized employees have access to sensitive financial data to ensure compliance with regulations such as the General Data Protection Regulation (GDPR).

In a project team, different members may require different levels of access to project materials.

For example, project managers may need access to all project resources, while external consultants may only have access to certain parts of the project.

In these scenarios, it is important that access levels are defined according to the roles and responsibilities of user groups to ensure the security of data while improving employee efficiency. By implementing role-based access control, organizations can ensure that users can only access the resources required for their respective function.
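As a rough illustration of role-based access control, the sketch below grants access only through group membership. The group names, user names, and resource names are hypothetical and loosely follow the finance and HR scenario above; it does not reflect how DocBits stores permissions internally.

```python
# Hypothetical groups and the resources each group may access.
GROUP_RESOURCES = {
    "finance": {"financial_reports", "invoices"},
    "hr": {"employee_data", "payroll"},
}

# Hypothetical users and their group memberships.
USER_GROUPS = {
    "alice": {"finance"},
    "bob": {"hr"},
    "carol": {"finance", "hr"},
}


def can_access(user: str, resource: str) -> bool:
    """A user may access a resource only if one of their groups grants it."""
    return any(
        resource in GROUP_RESOURCES.get(group, set())
        for group in USER_GROUPS.get(user, set())
    )
```

Because unknown users and unknown groups resolve to empty sets, the check denies by default, which matches the least-privilege approach.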

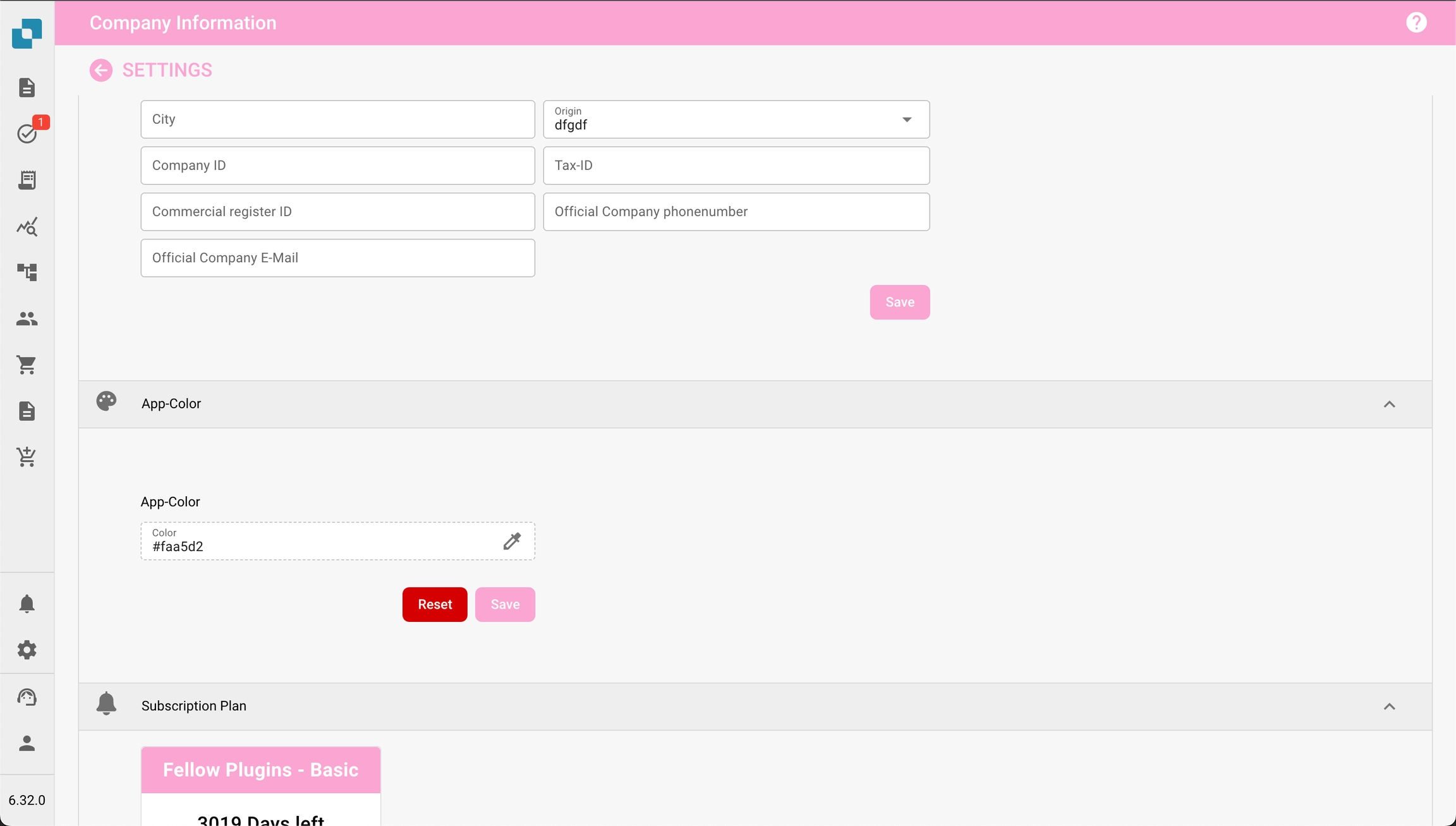

The App Color setting allows administrators to define the color scheme of the application interface. This feature is particularly useful for distinguishing between different environments such as testing, sandbox, and production. By assigning distinct colors to each environment, users can easily identify which environment they are working in, reducing the risk of performing critical actions in the wrong environment.

Navigate to Company Settings:

From the main menu, click on the Company Information section.

Locate the App Color Section:

Scroll down to the App Color section within the Company Information settings.

Choose a Color:

Click on the color box or enter a hex code directly into the text field.

A color picker will appear, allowing you to select the desired color.

You can enter a specific hex code if you have a predetermined color for the environment.

Save the Color:

Once you have selected the color, click on the Save button to apply the change.

The application interface will immediately update to reflect the new color.

Reset to Default:

If you wish to revert to the default color, click the Reset button.

To avoid confusion, it is recommended to establish a standard color scheme for each environment:

Production: Use a neutral or default color, such as #FFFFFF (white) or #f0f0f0 (light grey), to indicate the live environment.

Testing: Use a bright or alerting color, such as #ffcc00 (yellow) or #ffa500 (orange), to indicate a testing environment.

Sandbox: Use a distinct color, such as #007bff (blue) or #6c757d (grey), to indicate a sandbox or development environment.
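A team that documents its color scheme in a script or config file might validate it along these lines. This is a sketch under assumptions: the environment keys and helper names are made up for illustration, and only six-digit hex codes are accepted here.

```python
import re

# Accept only six-digit hex codes such as #ffcc00 (an assumption of this sketch).
HEX_COLOR = re.compile(r"#[0-9a-fA-F]{6}")

# Example scheme built from the colors suggested above.
ENVIRONMENT_COLORS = {
    "production": "#f0f0f0",
    "testing": "#ffcc00",
    "sandbox": "#007bff",
}


def is_valid_hex(color: str) -> bool:
    """Return True if the string is a six-digit hex color with a leading '#'."""
    return HEX_COLOR.fullmatch(color) is not None


def color_for(environment: str) -> str:
    """Look up the agreed color for an environment, ignoring letter case."""
    color = ENVIRONMENT_COLORS[environment.lower()]
    if not is_valid_hex(color):
        raise ValueError(f"invalid hex color for {environment}: {color}")
    return color
```

Keeping the mapping in one place makes it easy to confirm that no two environments share a color.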

Under the App Color section, administrators will also see information related to the Subscription Plan. This includes the current plan, its status, and the remaining days of subscription.

The App Color setting is a simple yet effective tool to help users quickly recognize the environment they are working in. By carefully selecting and managing these colors, organizations can minimize errors and improve workflow efficiency.

User settings are an area of a system where users can adjust personal preferences, account settings, and security settings. User settings typically include options such as password changes, profile information, notification preferences, and possibly individual permissions to access certain features or data.

In most organizations, only authorized people, usually administrators or system administrators, have access to user settings. This is because the settings can contain sensitive information that could compromise the security of the system if changed by unauthorized people. Administrators can manage user settings to ensure that they comply with the organization's policies and requirements and that the integrity of the system is maintained.

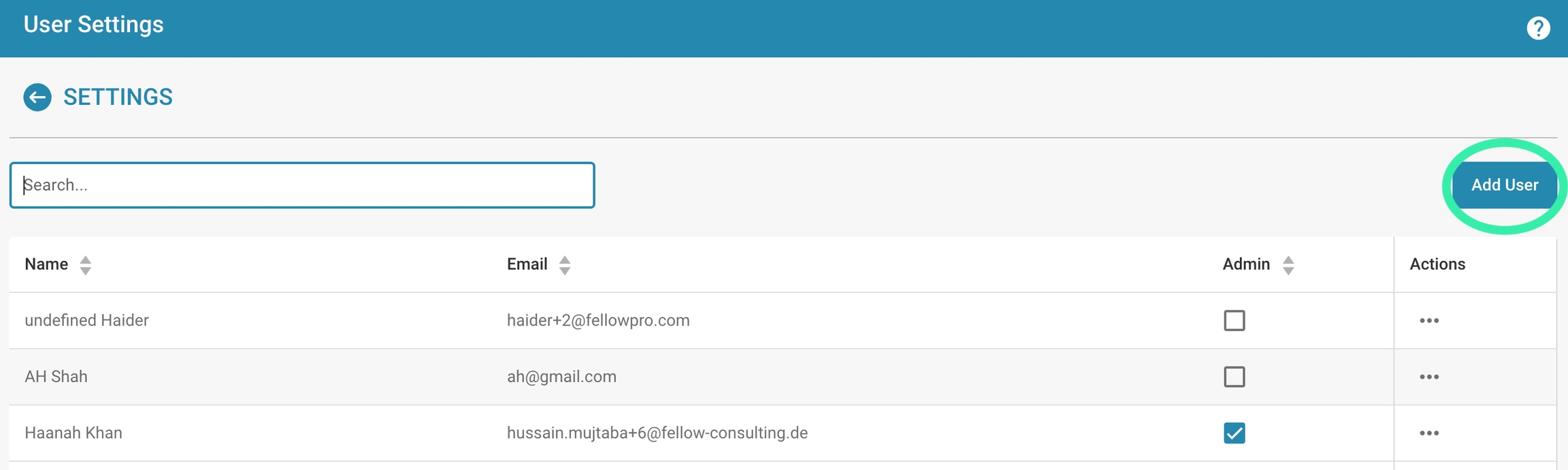

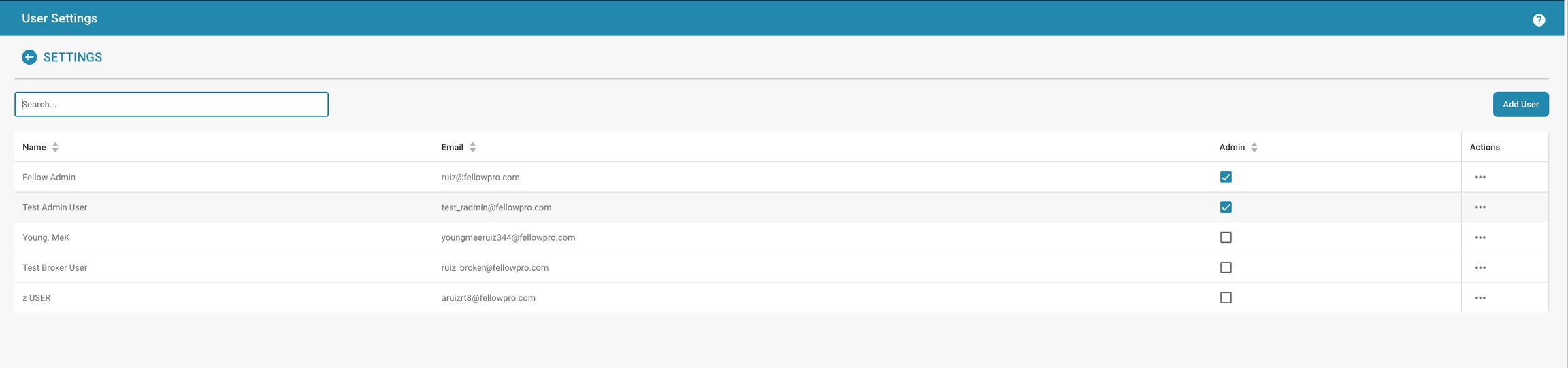

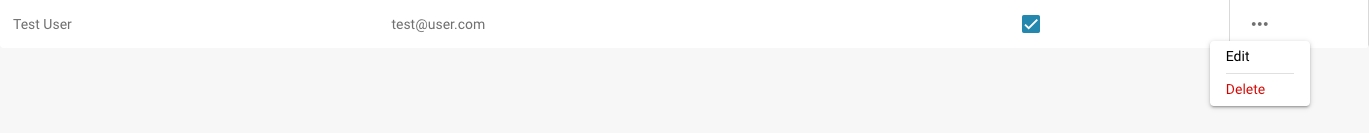

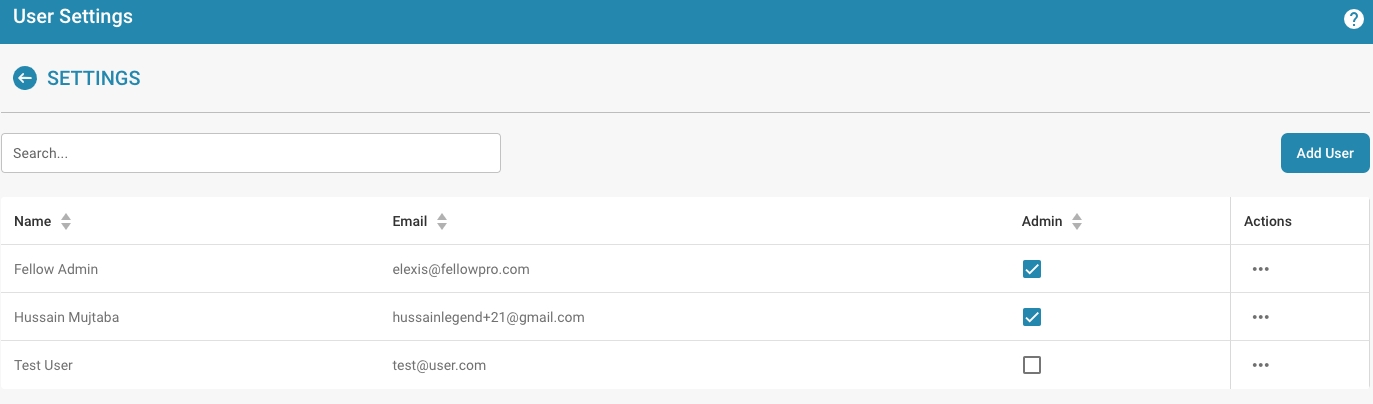

Search bar: Lets administrators quickly find users by searching for their names or other details.

User list: Shows a list of users with the following columns:

Name: The user's full name.

Email: The user's email address, which is probably used as their login identifier.

Administrator: A checkbox indicating whether the user has administrative privileges. Administrators typically have access to all settings and can manage other user accounts.

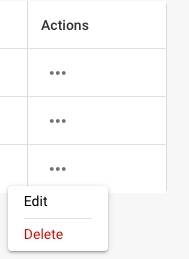

Actions: This column typically contains buttons or links for actions such as editing user details, resetting passwords, or deleting the user account.

Add User button: This button is used to create new user accounts. Clicking it typically opens a form where you can enter the new user's details, such as their name, email address, and whether they should have administrator rights.

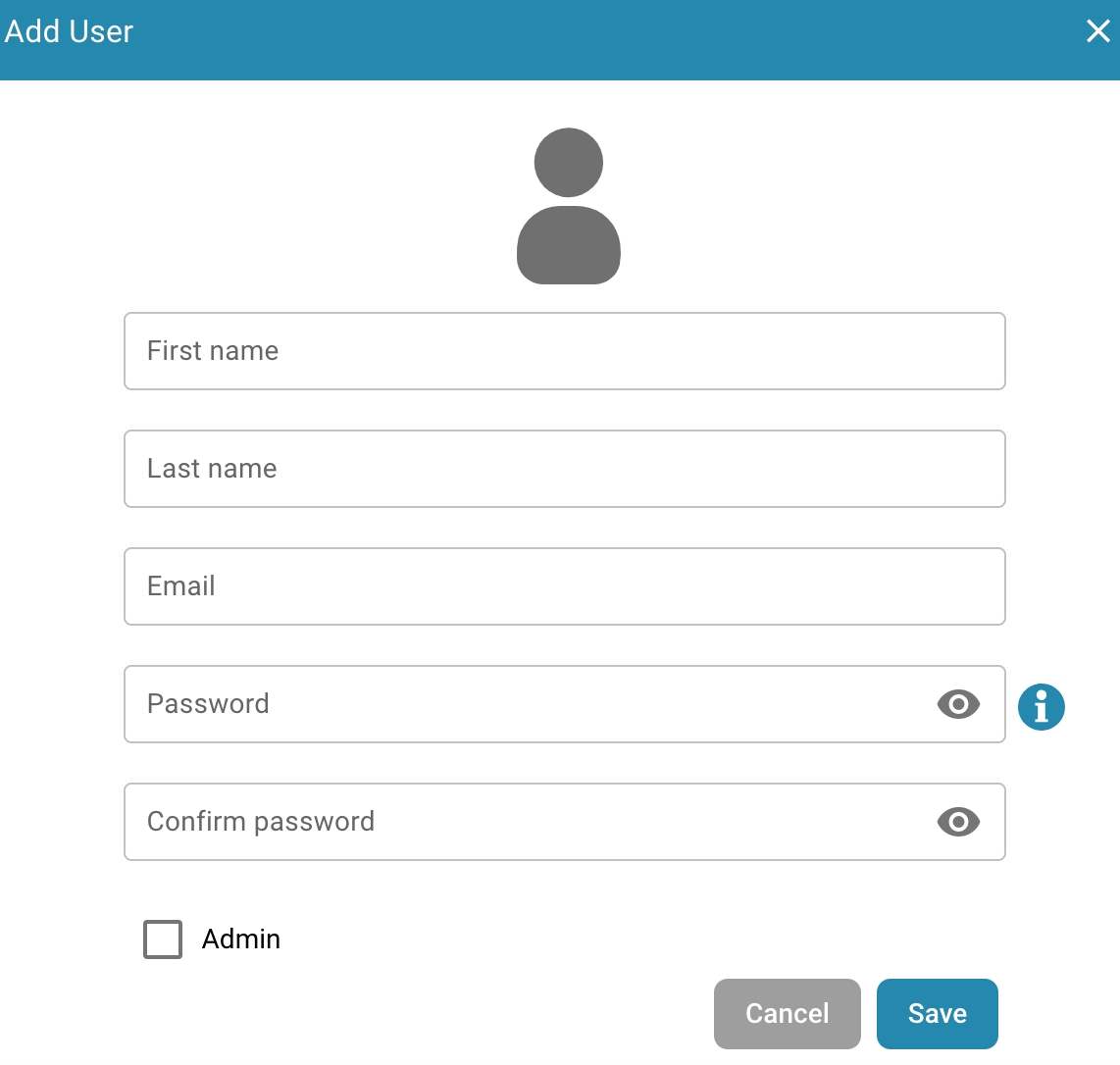

Access user management: Navigate to Settings - Global Settings - Groups, Users and Permissions - Users, where you can add new users.

Add a new user: In the user settings, click "Add User".

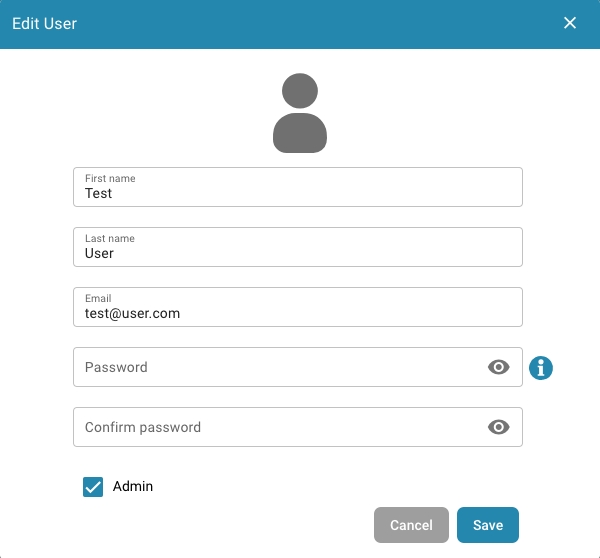

Fill out the form: A form appears where you can enter the information for the new user. Typical information includes:

Username: A unique name for the user, used to log in.

First name and last name: The user's name.

Email address: The user's email address, used for communication and notifications.

Password: A password for the user that must comply with the security policy.

User role: Set the user's role, e.g. standard user or administrator.

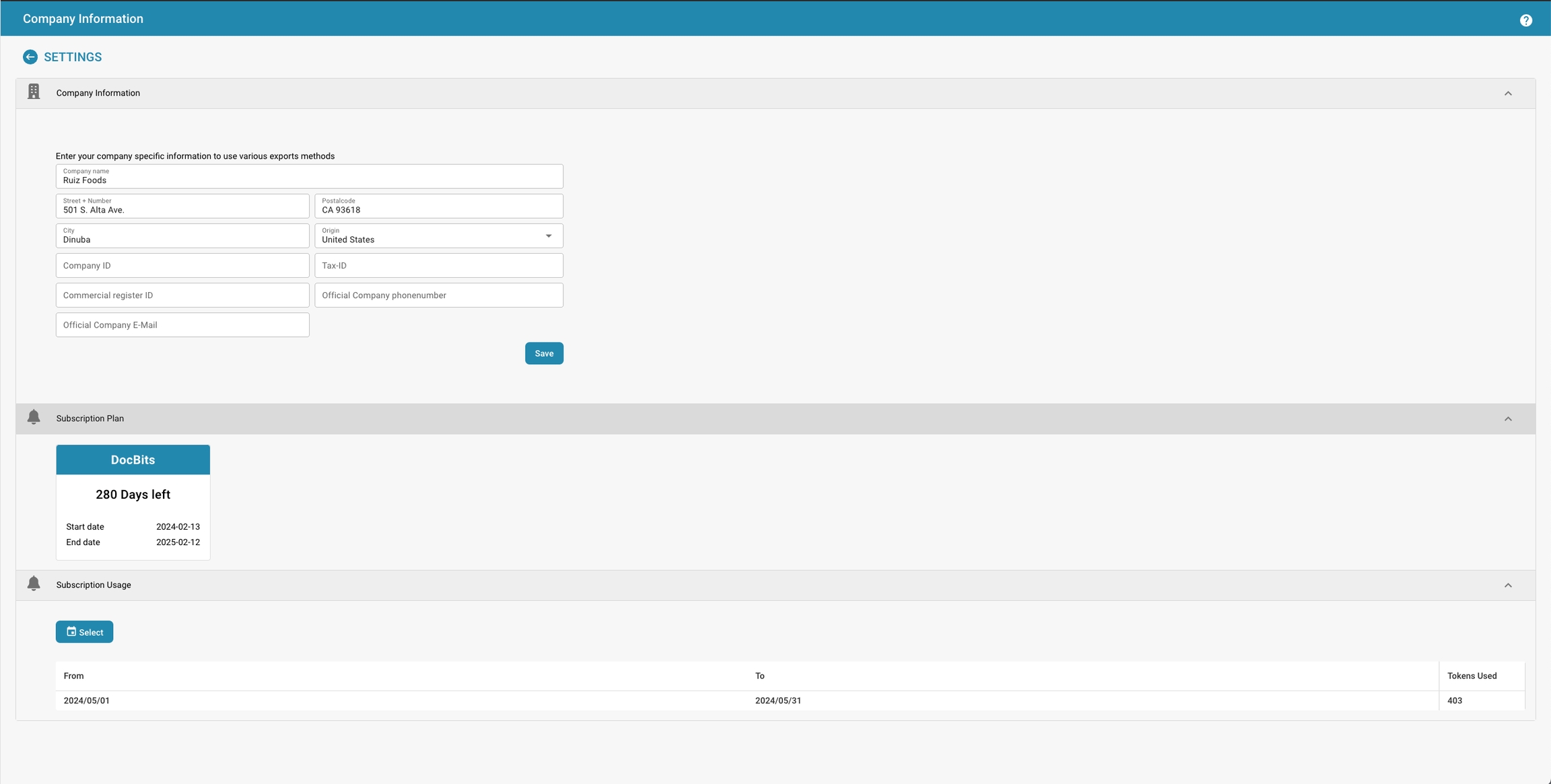

Company Name: The legal name of the company as registered.

Street + Number: The physical address of the company's headquarters or main office.

Postal Code: The ZIP or postal code for the company's address.

City: The city where the company is located.

State: The state or region where the company is located.

Country: The country where the company operates.

Company ID: A unique identifier for the company, which can be used internally or for integrations with other systems.

VAT ID: The tax identification number for the company, important for financial operations and reporting.

Commercial Register ID: The company's registration number in the commercial register, which can be important for legal and official documentation.

Official Company Phone Number: The primary contact number for the company.

Official Company Email: The main email address that will be used for official communication.

The information entered here can be crucial for ensuring that documents such as invoices, official correspondence, and reports are formatted with the correct company details. It also helps maintain consistency in how the company is presented across external communications and documents. After entering or updating the information, the administrator must save the changes by clicking the "Save" button so that all adjustments are applied system-wide.

In addition, the section provides an overview of the subscription plan, with information on how many days remain, the start and end dates, and a subscription usage meter that tracks the consumption of service tokens against what is allocated in the plan. This can help administrators monitor usage trends and plan subscription renewals or upgrades accordingly.

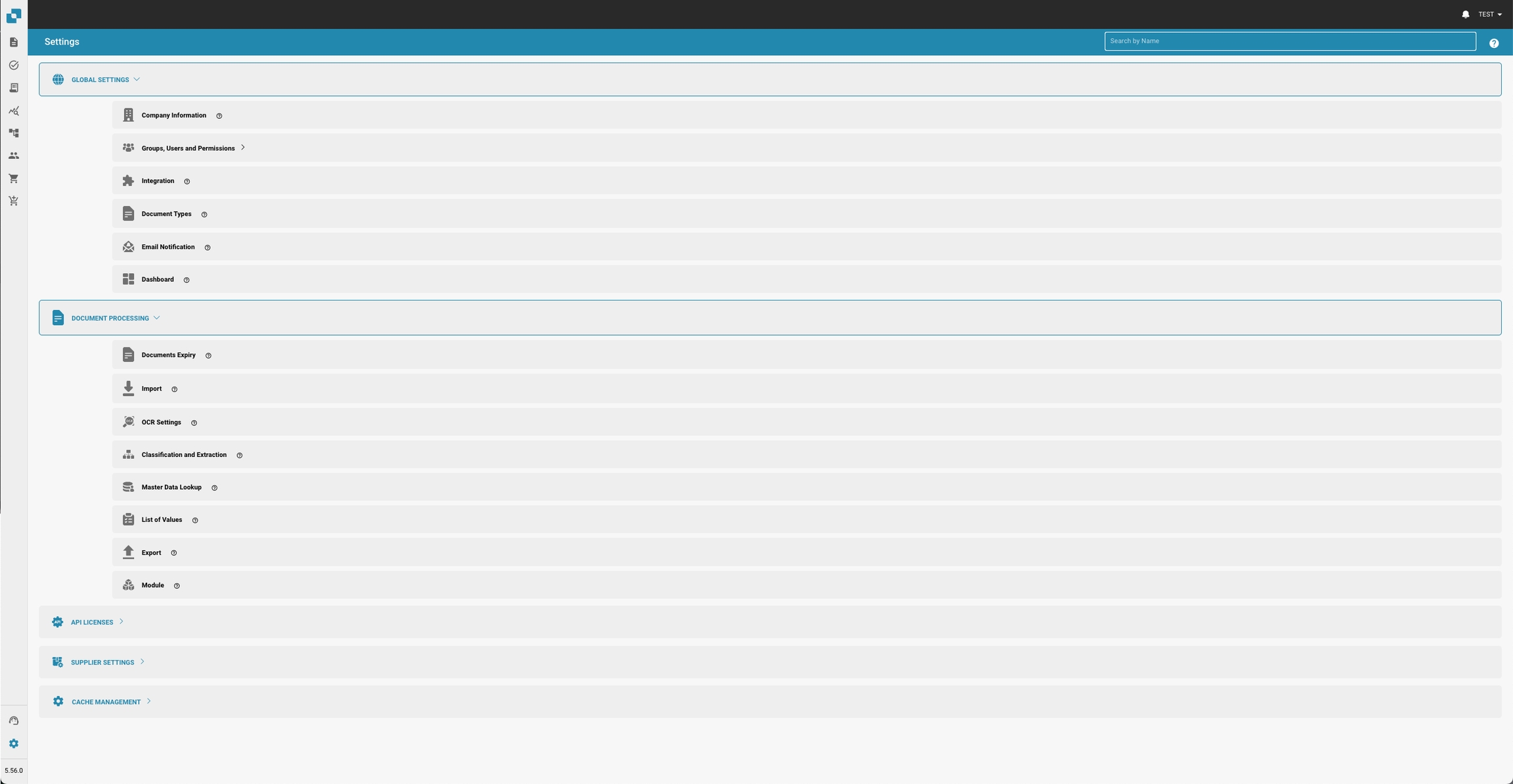

Global Settings:

Company Information: Define and edit basic details about the company, such as name, address, and other identifiers.

Groups, Users and Permissions: Manage user roles and permissions, allowing different levels of access to different functions within DocBits.

Integration: Set up integrations with other software or systems, extending the functionality of DocBits with external services.

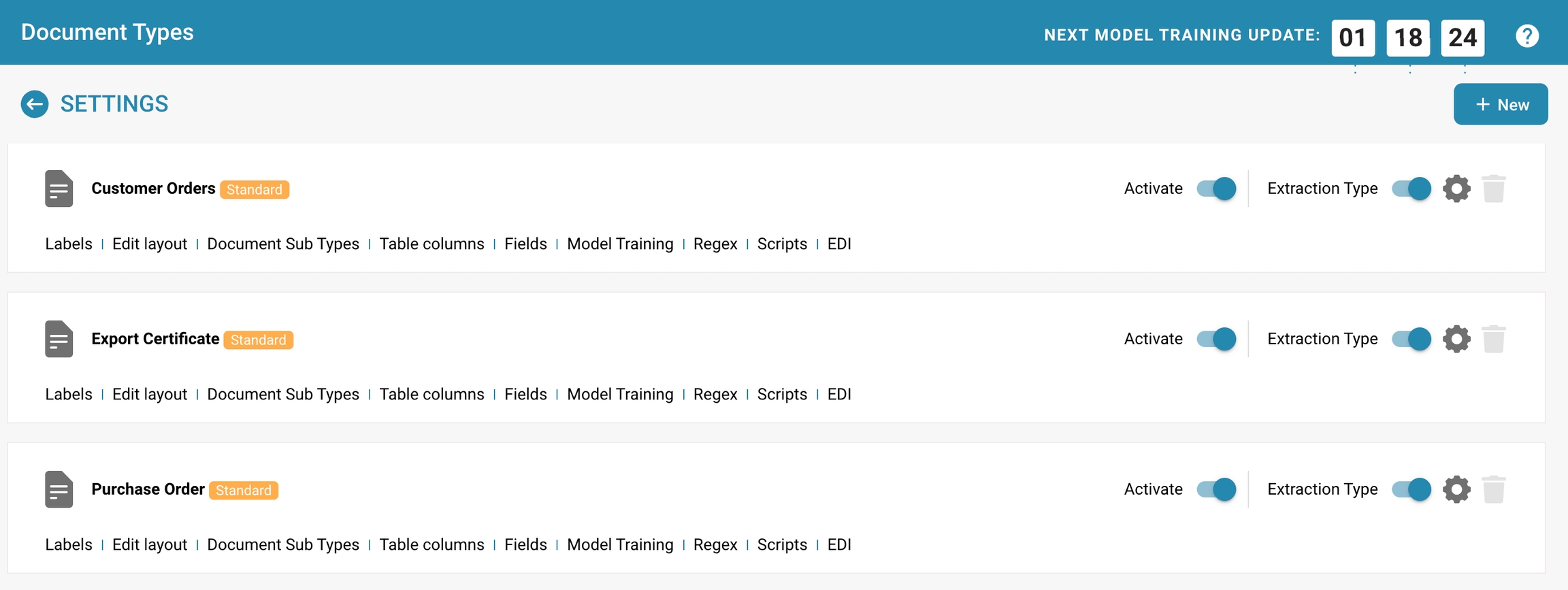

Document Types: Specify and manage the different types of documents that DocBits will process, such as invoices, purchase orders, etc.

Email Notification: Configure settings for email alerts and notifications related to document processing activities.

Dashboard: Customize the dashboard view with widgets and statistics that matter to the users.

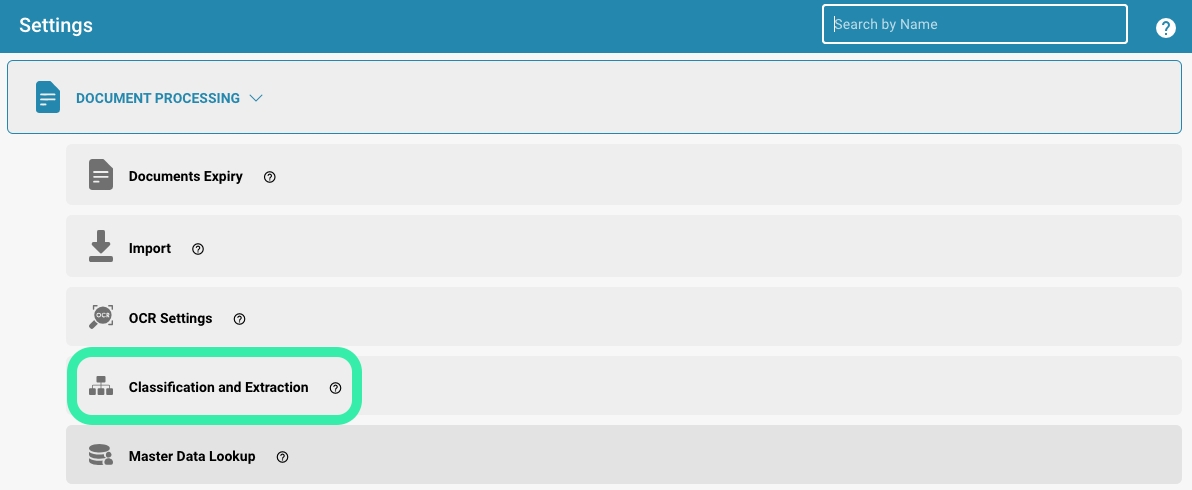

Document Processing:

Document Expiry: Set rules for how long documents are retained before they are archived or deleted.

Import: Configure how documents are imported into DocBits, including source settings and file types.

OCR Settings: Adjust settings for Optical Character Recognition (OCR), which converts images of text into machine-encoded text.

Classification and Extraction: Define how documents are categorized and how data is extracted from documents.

Master Data Lookup: Set up lookups for validating or supplementing extracted data with existing master data.

List of Values: Manage predefined lists used in data entry and validation.

Export: Configure how and where processed documents and data are exported.

Module: Additional modules that can be configured to extend functionality.

API Licenses: Manage API keys and monitor usage statistics for APIs used by DocBits.

Supplier Settings: Configure and manage settings specific to suppliers, possibly integrated with supplier management systems.

Cache Management: Adjust settings related to data caching to improve system performance.

Logging in as an administrator: Log in with your administrator privileges.

Accessing user management: Navigate to user settings where you can edit existing users.

Selecting users: Find and select the user whose details you want to change. This can be done by clicking on the username or an edit button next to the user.

Editing user details: A form will appear containing the user’s current details. Edit the required fields according to the changes you want to make. Typical details to edit include:

First and last name

Email address

User role or permission level

Saving Changes: Review any changes you made and click Save to save the new user details.

Review the impact of role changes: If you changed the user's role, review how that change affects their access levels. Make sure that after the role change, the user still has the permissions required to perform their duties.

Send notification (optional): You can send a notification to the user to inform them of the changes made.

After completing these steps, the user details are successfully updated and the user has the new information and permissions according to the changes made.

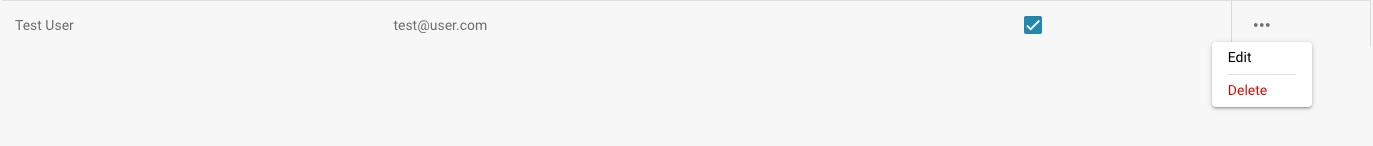

Selecting Users: Find and select the user whose access you want to remove. This can be done by clicking the user name or an edit button next to the user.

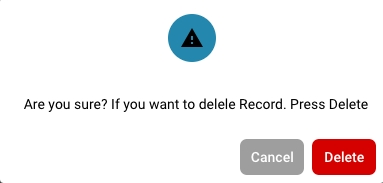

Removing Access: Click "delete" to remove the user.

Confirmation: You are asked to confirm the user’s removal.

Optional Notification: You can optionally send a notification to the user to inform them of the removal of their access.

Review Tasks and Documents: Before removing the user, review what tasks or documents are assigned to the user. Move or transfer responsibility for those tasks or documents to another user to make sure nothing gets lost or left unfinished.

Save Changes: Confirm the user’s removal and save the changes.

By following these steps, you can ensure that the user’s access is safely removed while properly managing all relevant tasks and documents.
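The rule of handing over tasks and documents before removing a user can be expressed as a small in-memory sketch. The function name, the data shapes, and the document IDs are hypothetical; this is not a DocBits API.

```python
def remove_user(users: set[str], assignments: dict[str, str],
                user: str, successor: str) -> list[str]:
    """Reassign everything owned by `user` to `successor`, then remove `user`.

    `assignments` maps a task or document ID to the name of its owner.
    Returns the IDs that were transferred.
    """
    if user not in users:
        raise KeyError(f"unknown user: {user}")
    if successor not in users or successor == user:
        raise ValueError("successor must be a different, existing user")
    # Transfer ownership first so nothing is left without an owner.
    moved = [item for item, owner in assignments.items() if owner == user]
    for item in moved:
        assignments[item] = successor
    users.remove(user)
    return moved
```

Performing the transfer before the removal means a failure partway through never leaves documents assigned to a deleted account.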

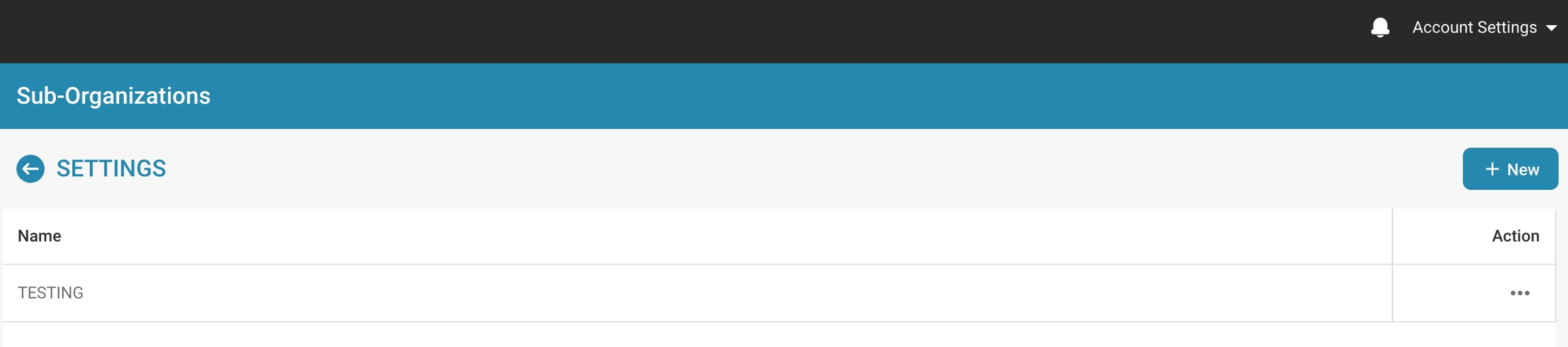

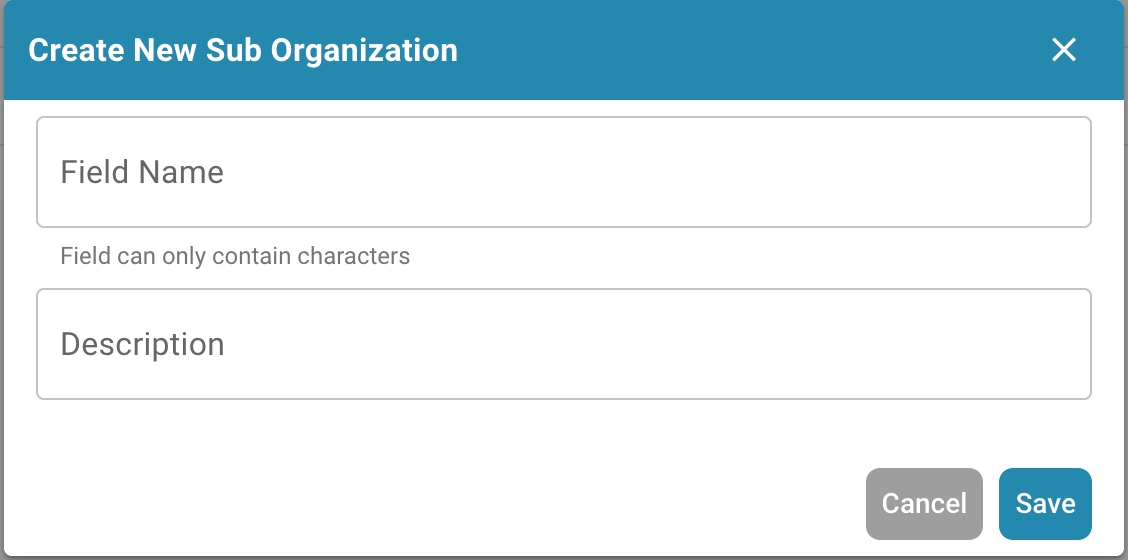

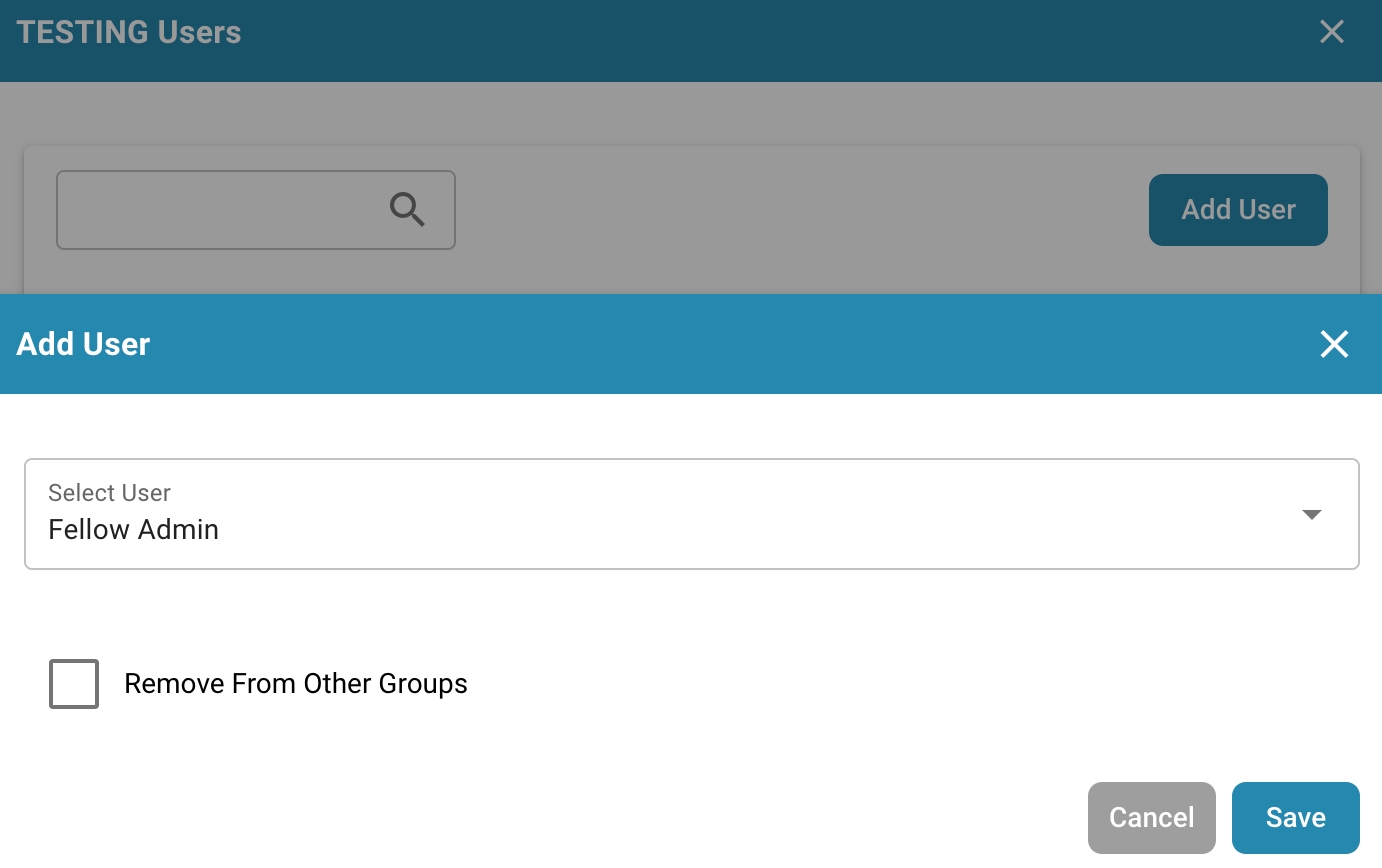

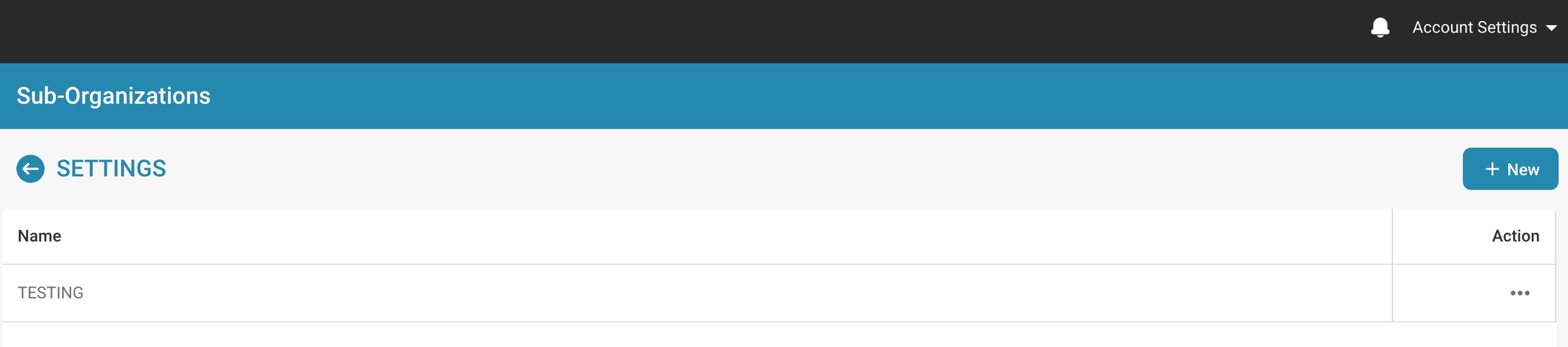

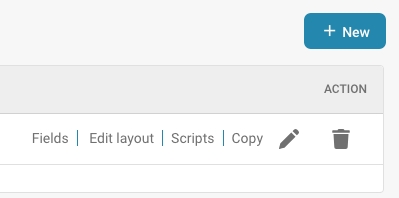

Click on the + NEW button

The following menu will be displayed:

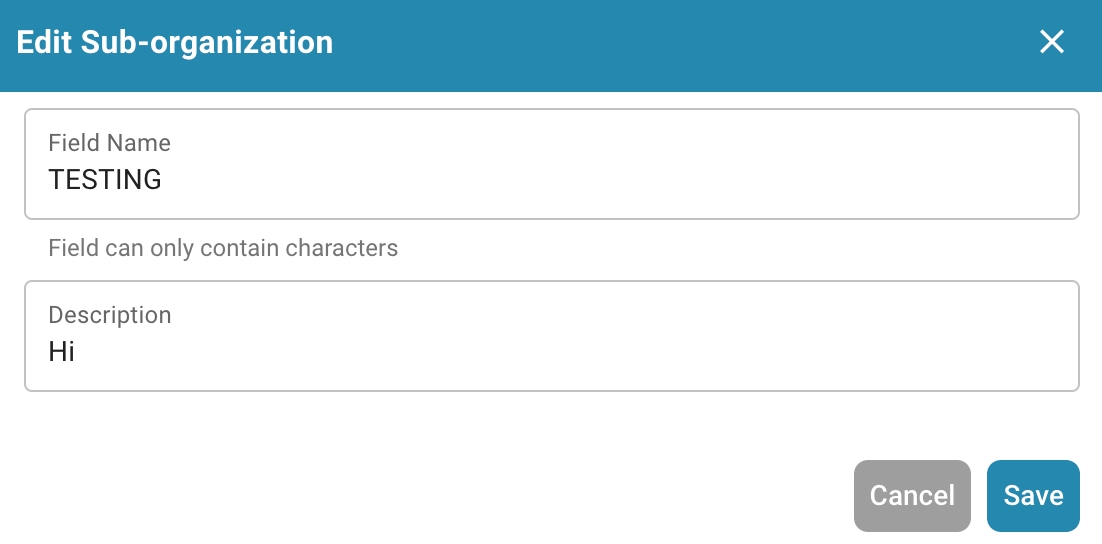

Enter the name and description of the sub-organization you want to create, then click the SAVE button. The newly created sub-organization should then appear at the bottom of the list of existing sub-organizations.

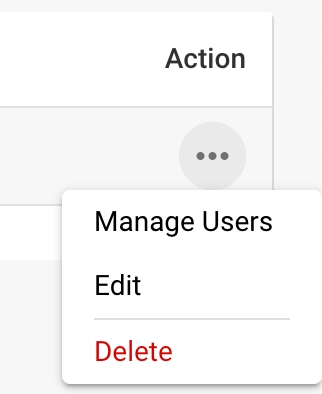

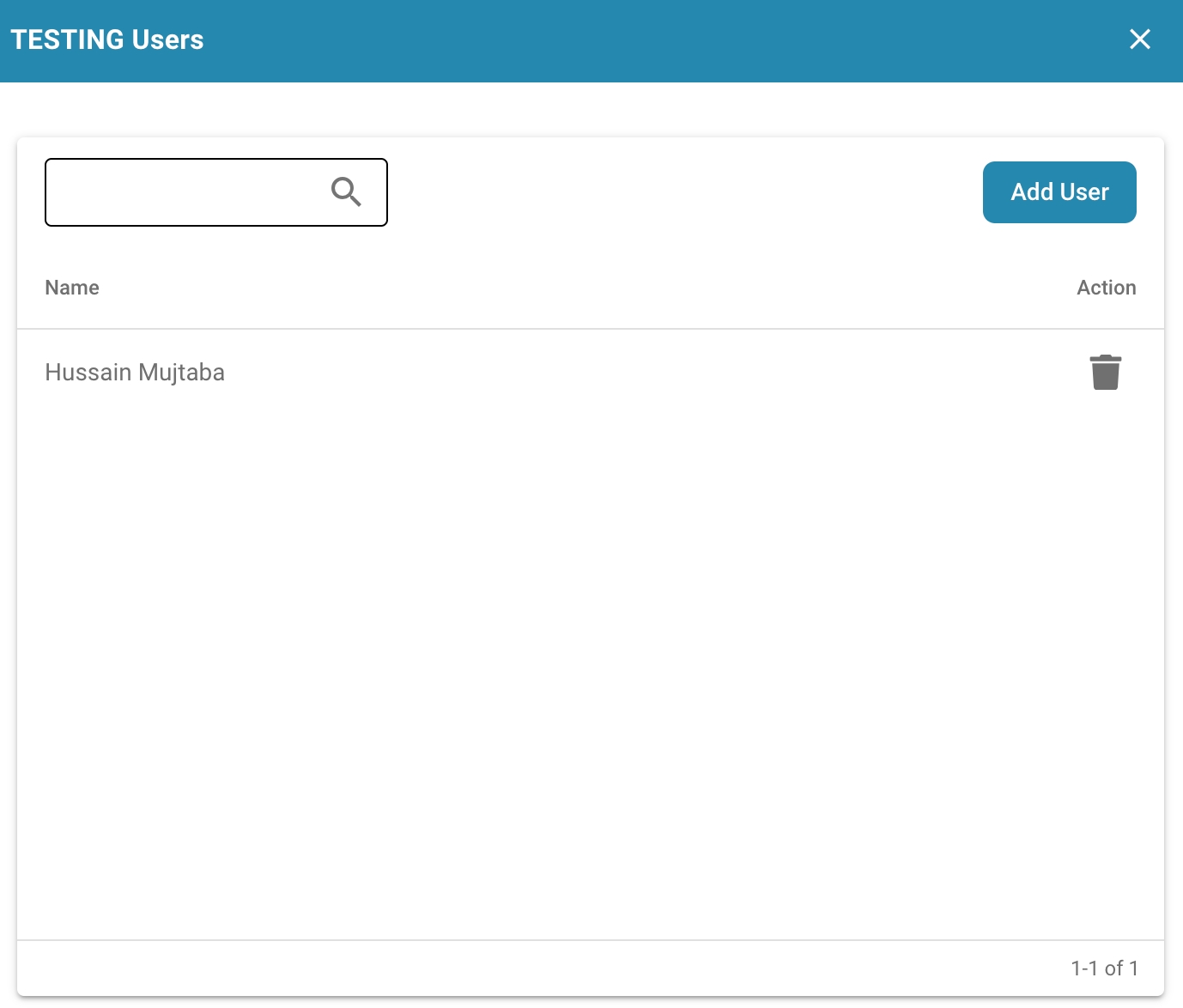

Manage Users:

Adding Users: Administrators can create new user accounts and assign them to the appropriate sub-organization.

Assigning Roles and Permissions: Administrators can set the roles and permissions for users within a suborganization. This typically involves assigning access rights to specific documents, folders or functions in the document management system.

Manage profile details: Administrators can edit profile details of users within the sub-organization, such as contact information or department affiliation. This keeps user data accurate and up to date.

When you add a new user to the organization, you can choose whether to remove that user from other groups.

Edit User:

Editing suborganization settings: Administrators can edit the settings and properties of a suborganization, including its name, description, or hierarchy level within the system.

Edit user details: Administrators can edit the details of individual users within a sub-organization, for example to adjust their access rights or update their contact information.

Delete User:

Deleting sub-organizations: Administrators may also have the ability to delete sub-organizations if they are no longer needed or if a restructuring of the organizational structure is required. When deleting a suborganization, administrators must ensure that all users and data associated with it are handled properly.

These management features enable administrators to effectively manage and adapt the user accounts and organizational structures within a document management system to meet the company's changing needs and processes.

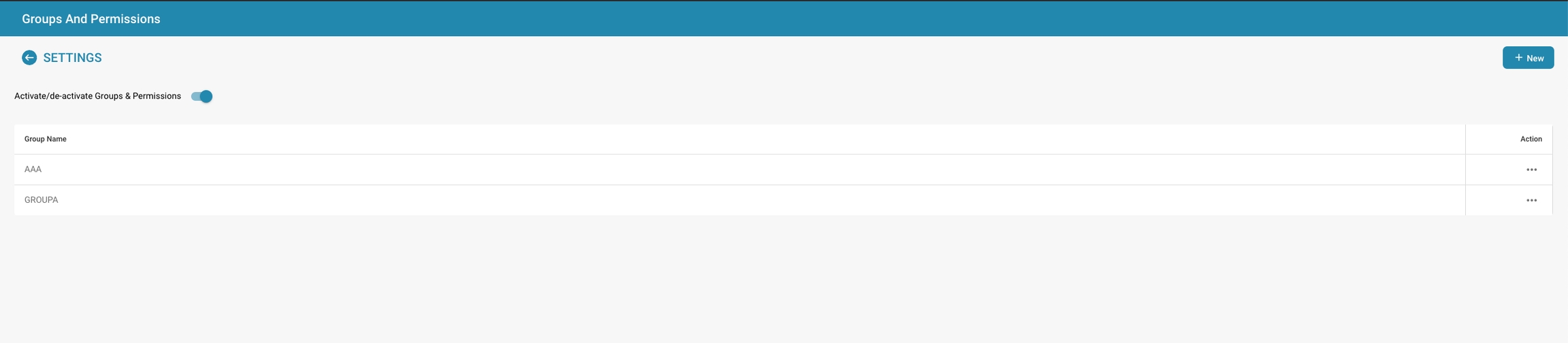

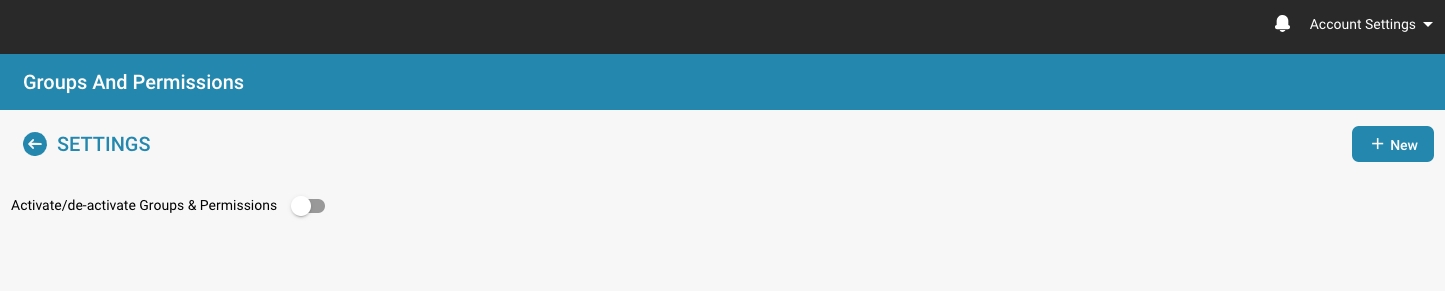

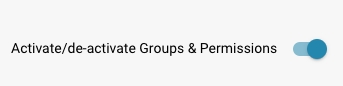

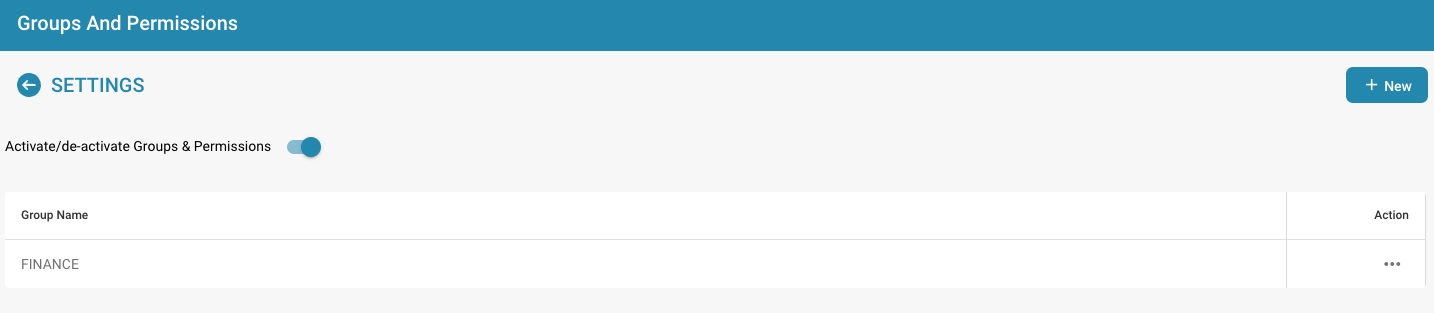

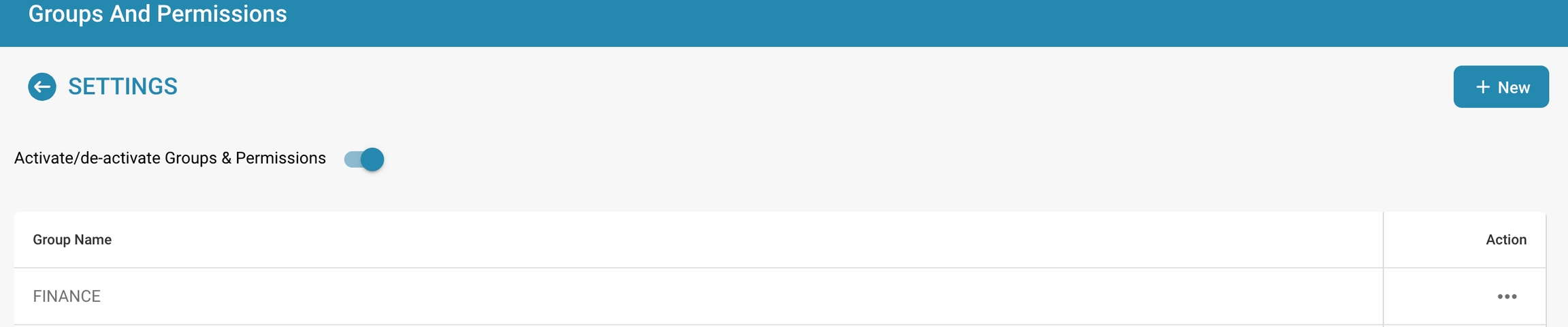

Enable/Disable Groups and Permissions: A toggle that lets the system administrator switch the use of groups and permissions on the platform on or off. When it is disabled, the system may fall back to a less granular access control system by default.

Group list: Shows the list of user groups available within the organization. Each group can be configured with specific permissions. Administrators can add new groups by clicking the "+ New" button.

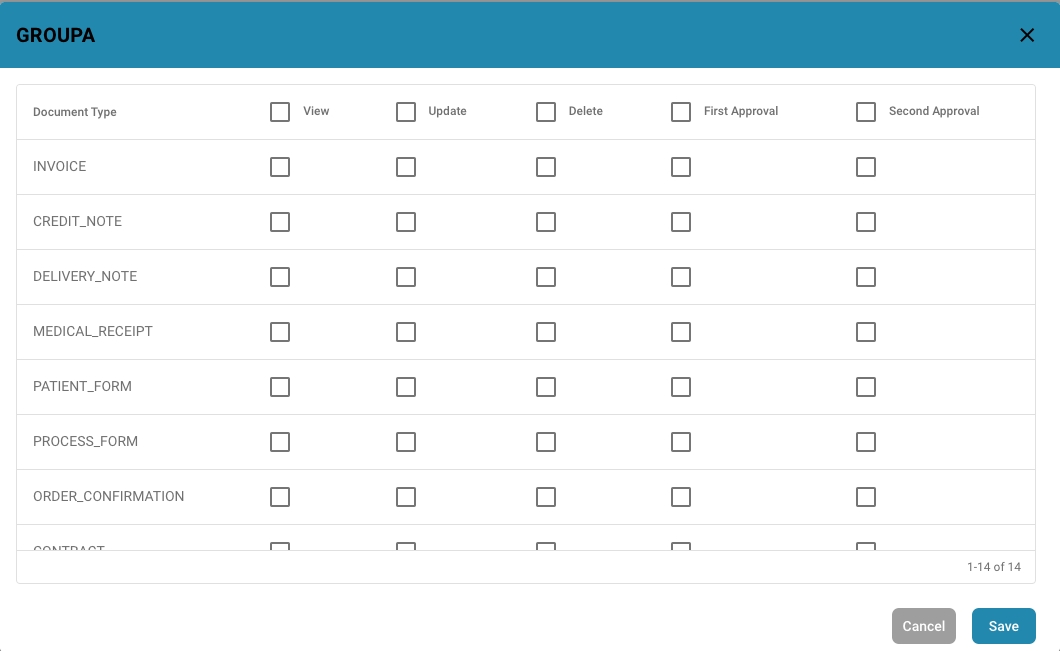

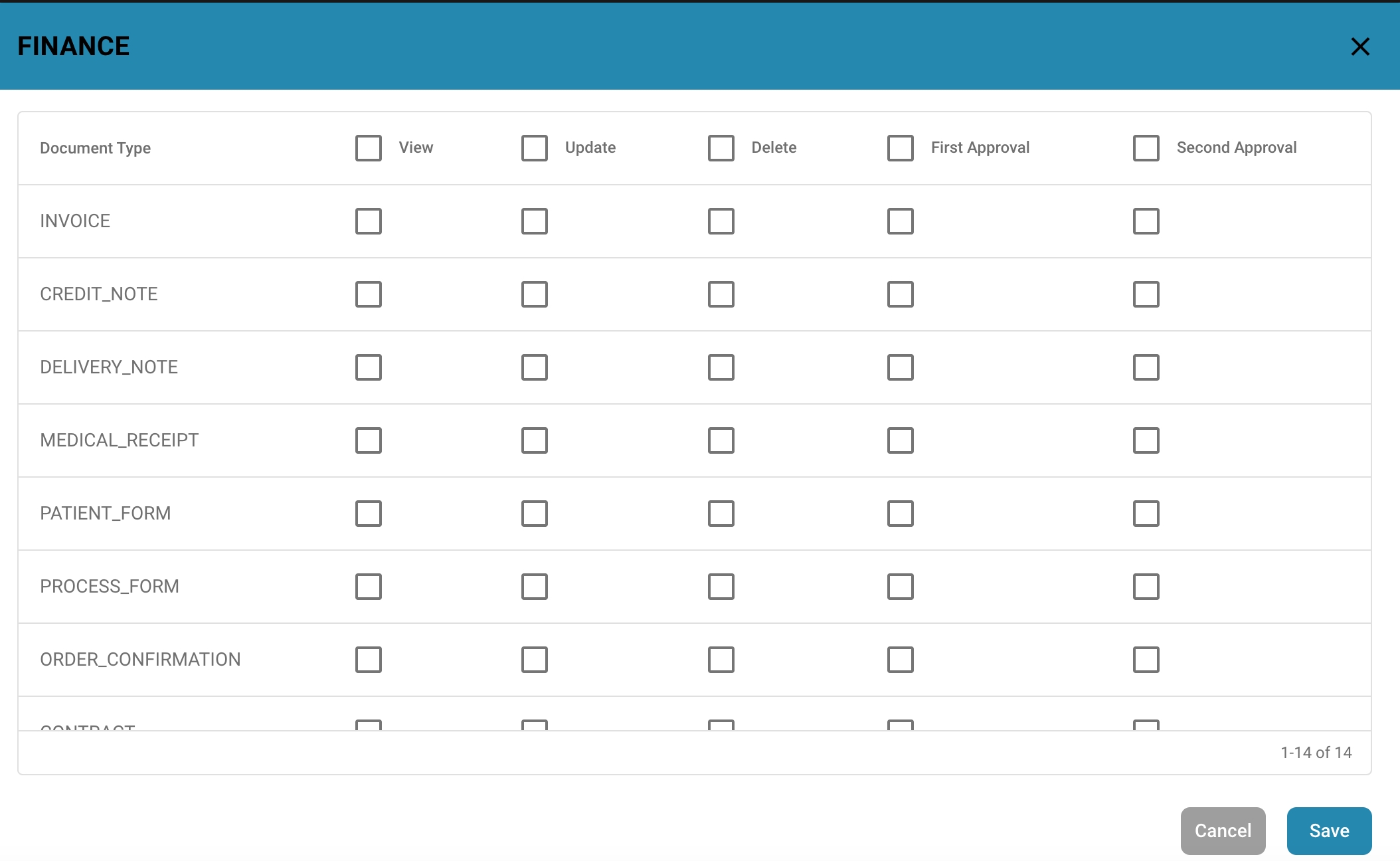

Permissions table:

Displayed once a group has been selected or a new group is being configured.

Lists all document types recognized by the system (e.g. INVOICE, CREDIT NOTE, DELIVERY CONFIRMATION).

For each document type, there are checkboxes for different permissions:

View: Permission to see the document.

Update: Permission to modify the document.

Delete: Permission to remove the document from the system.

First Approval: Permission to perform the initial approval of the document.

Second Approval: Permission to perform a secondary approval (if applicable).
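One way to picture the permissions table is as a nested mapping from group to document type to the set of checked permissions. The sketch below is an illustrative data model only; the group name, document type keys, and helper function are assumptions and do not describe the DocBits storage format.

```python
from enum import Enum


class Permission(Enum):
    VIEW = "view"
    UPDATE = "update"
    DELETE = "delete"
    FIRST_APPROVAL = "first_approval"
    SECOND_APPROVAL = "second_approval"


# group -> document type -> granted permissions (the checked boxes in one row)
PermissionTable = dict[str, dict[str, set[Permission]]]

# Hypothetical example: one group with two document types configured.
table: PermissionTable = {
    "accounting": {
        "INVOICE": {Permission.VIEW, Permission.UPDATE, Permission.FIRST_APPROVAL},
        "CREDIT_NOTE": {Permission.VIEW},
    },
}


def is_allowed(table: PermissionTable, group: str, doc_type: str,
               permission: Permission) -> bool:
    """Return True only if the box for this group, type, and permission is checked."""
    return permission in table.get(group, {}).get(doc_type, set())
```

An unchecked box and a missing row behave the same way here: both result in the permission being denied.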

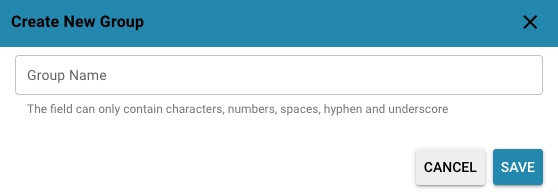

Navigate to Group Settings: Log in to your admin account and go to Group Settings in the admin panel.

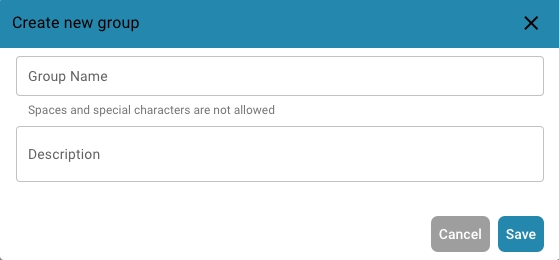

This window will open:

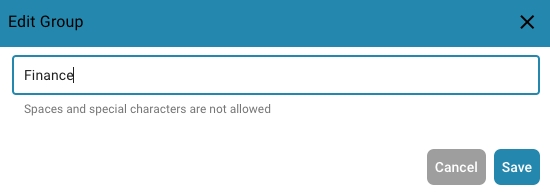

Click the + New button: To add a new group, click the + New button.

Fill out the table: Provide the group name and a description of the group.

Save the details: Once you have filled in the group name and description, click the "Save" button.

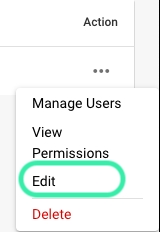

Edit Groups: To edit a group, click "edit". Here you can change the group name.

Enable Groups & permissions: To make the group visible, "Groups & permissions" must be enabled.

Check the results: After saving, review the results to make sure the group was successfully added, edited, or updated.

Creating sub-organizations within a document management system serves to further organize and differentiate the structure and management of user accounts, documents, and workflows within an organization. Here are some purposes and benefits of creating sub-organizations:

Structuring and organization: Sub-organizations make it possible to create a hierarchical structure within the document management system. This can help organize user accounts and documents by department, team, location, or other relevant criteria for clearer and more efficient management.

Permission management: By creating sub-organizations, administrators can set detailed permissions and access controls for different groups of users. This means that specific users or groups only have access to the documents and resources relevant to their respective sub-organization, which improves security and privacy.

Workflows and collaboration: Sub-organizations can facilitate collaboration and communication within specific teams or departments by centralizing access to shared documents, projects, or tasks. This promotes efficiency and coordination when working together on shared projects or workflows.

Reporting and analysis: By organizing user accounts and documents into sub-organizations, detailed reports and analyses can be produced on the activities and performance of individual teams or departments. This gives administrators and managers insight into how the document management system is used across the organization.

Scalability and flexibility: Sub-organizations provide a scalable structure that can grow and change with the organization. New teams or departments can easily be added and integrated into the existing sub-organization scheme without affecting the overall structure of the document management system.

Overall, sub-organizations enable more effective management and organization of user accounts, documents, and workflows within a document management system by improving structure, security, and collaboration.

Go to Settings, Global Settings → Groups, Users and Permissions → Sub-Organizations as shown below.

You will then be taken to a page that looks something like this:

Here you will find your previously created sub-organizations, as well as the option to create new ones.

The integration settings play a crucial role in the efficiency and functionality of DocBits, because they enable seamless interaction with other tools and services. Here are some reasons why it is important to configure the integration settings properly:

Increased efficiency: Integration with other tools and services can streamline workflows and increase efficiency. For example, documents can be exchanged automatically between DocBits and a CRM system, reducing manual entry and increasing productivity.

Data consistency: Integration makes it possible to exchange data seamlessly between different systems, which improves data consistency and accuracy. This prevents inconsistencies or duplicate data entry that can lead to errors.

Real-time updates: Integration enables real-time updates between different platforms, so that users always have the most current information. This is especially important for critical business processes that require real-time information.

Task automation: Integration makes it possible to automate routine tasks, saving time and resources. For example, notifications can be triggered automatically when a certain event occurs in another system, without manual intervention.

Improved user experience: A well-configured integration provides a seamless user experience, since users do not have to switch between different systems to obtain relevant information. This improves user satisfaction and contributes to efficiency.

To configure the integration settings properly, it is important to understand the organization's requirements and to make sure that the integration fits into existing workflows and processes. This requires thorough planning, configuration, and monitoring of the integration to ensure that it runs smoothly and delivers the intended value.

Check the permission settings for the documents or resources in question to ensure that users have the necessary permissions.

Make sure that users have access either directly or through group membership.

Check that the affected users are actually members of the groups that have been granted access.

Make sure that users have not been accidentally removed from relevant groups.

Check whether individual permissions have been set at the user level that could override group permissions.

Make sure that these individual permissions are configured correctly.

Make sure that permissions are inherited correctly and are not blocked by parent folders or other settings.

Check permission history or logs to see if there have been any recent changes to permissions that could be causing the current issues.

Try accessing the affected documents with a different user account to see if the issue is user-specific or affects all users.

Make sure users are getting accurate error messages indicating permission issues. This can help you pinpoint and diagnose the problem more accurately.

If all other solutions fail, try reconfiguring permissions for the affected users or groups and ensure that all required permissions are granted correctly.

By following these troubleshooting tips, you can identify and resolve permission-related issues to ensure that users have the required access rights and can work effectively.
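The first few checks can be combined into a single diagnostic helper, sketched below. The data shapes and the function name are hypothetical, and the sketch assumes that a user-level override takes precedence over group permissions.

```python
def diagnose_access(user, resource, user_groups, group_grants, user_overrides):
    """Return a short explanation of why `user` can or cannot access `resource`.

    user_groups:    user -> set of group names
    group_grants:   group -> set of resources the group may access
    user_overrides: (user, resource) -> True (allow) or False (deny)
    """
    # Assumption: an explicit user-level setting wins over group permissions.
    override = user_overrides.get((user, resource))
    if override is not None:
        return f"user-level override: {'allow' if override else 'deny'}"
    groups = user_groups.get(user, set())
    if not groups:
        return "denied: user is not a member of any group"
    granting = sorted(g for g in groups if resource in group_grants.get(g, set()))
    if granting:
        return "allowed via group(s): " + ", ".join(granting)
    return "denied: none of the user's groups grants this resource"
```

Returning a reason instead of a plain yes or no mirrors the advice above about accurate error messages: the text points directly at the setting to inspect.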

First check which type of M3 your organization is currently using, V1 or V2.

Best practices for configuring and maintaining integration settings help ensure the efficiency, security, and reliability of the integration between DocBits and your identity provider (IdP).

Here are some best practices:

Regularly review settings: Perform regular reviews of integration settings to ensure all configurations are correct and up-to-date. Changes to systems or policies may require updates to the integration.

Certificate and metadata updates: Monitor SAML certificate and metadata expiration dates and update them in a timely manner to avoid service disruptions. Use automated processes or reminders to ensure no expiration dates are missed.

Security-conscious credential management: Treat credentials such as API keys or certificates with the utmost confidentiality and protect them from unauthorized access. Use secure methods for storing and exchanging credentials to ensure the integrity of the integration.

Documentation and logging of changes: Record and log all changes to integration settings in detailed documentation. This allows you to track changes and revert to previous configurations when needed.

Training administrators: Ensure that the administrators responsible for configuring and maintaining integration settings have the necessary knowledge and skills. Provide training and resources to ensure they understand and can implement integration best practices.

Setting up alerts and notifications: Configure alerts and notifications for critical events such as certificate expiration dates or failed authentication attempts. This will allow you to identify potential issues early and proactively address them.

By following these best practices, you can ensure that the integration between DocBits and your identity service provider works smoothly, is secure, and meets the needs of your organization.
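The certificate-expiry reminder described above could be automated with a small check like the following. This is a minimal sketch, not a DocBits feature: the function names and the 30-day warning threshold are assumptions, and the expiry string is assumed to be in the `notAfter` format that OpenSSL prints (for example `Jan 31 00:00:00 2025 GMT`).

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> int:
    """Return the whole days between `now` and a certificate's notAfter date."""
    # ssl.cert_time_to_seconds parses strings such as "Jan 31 00:00:00 2025 GMT".
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).days


def needs_renewal(not_after: str, now: datetime, warn_days: int = 30) -> bool:
    """True when the certificate expires within `warn_days` (assumed threshold)."""
    return days_until_expiry(not_after, now) <= warn_days
```

A scheduled job could call `needs_renewal` with `datetime.now(timezone.utc)` and notify the responsible administrator when it returns `True`.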

Turning the permission system on or off with the switch has different effects on functionality in DocBits.

When the permission system is enabled, the access rights for users and groups are applied.

Users can access only the resources for which they are explicitly authorized through their assigned permissions.

Administrators can manage the permissions for individual users and groups and ensure that only authorized people can view or edit data.

When the permission system is disabled, all access rights are removed and users generally have unrestricted access to all resources.

This can be useful when open collaboration is temporarily required without the restrictions of access control.

However, the risk of data leaks or unauthorized access may increase, because users may be able to reach sensitive information for which they are not authorized.

Turning the permission system on or off is an important decision that depends on the organization's security requirements and way of working. In environments where privacy and access control are crucial, it is common to leave the permission system enabled to safeguard the integrity and confidentiality of data. In other cases it may be temporarily necessary to disable the permission system to facilitate collaboration, but this should be done with caution to minimize potential security risks.
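The effect of the switch can be illustrated with a small model. This is a sketch of the behavior described above, not DocBits code; the function name, the group names, and the resource names are hypothetical.

```python
def can_access(user_groups, resource, grants, permissions_enabled):
    """Model the permission switch: grants are enforced only while it is on.

    `grants` maps a group name to the set of resources that group may access.
    """
    if not permissions_enabled:
        # Switch off: access rights are not applied, so every resource is open.
        return True
    # Switch on: at least one of the user's groups must hold an explicit grant.
    return any(resource in grants.get(group, set()) for group in user_groups)


# Hypothetical groups and resources, for illustration only.
grants = {"ACCOUNTING": {"invoices"}, "HR": {"contracts"}}
```

With the switch on, a member of `ACCOUNTING` can reach `invoices` but not `contracts`; with the switch off, both are reachable, which is the risk described above.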

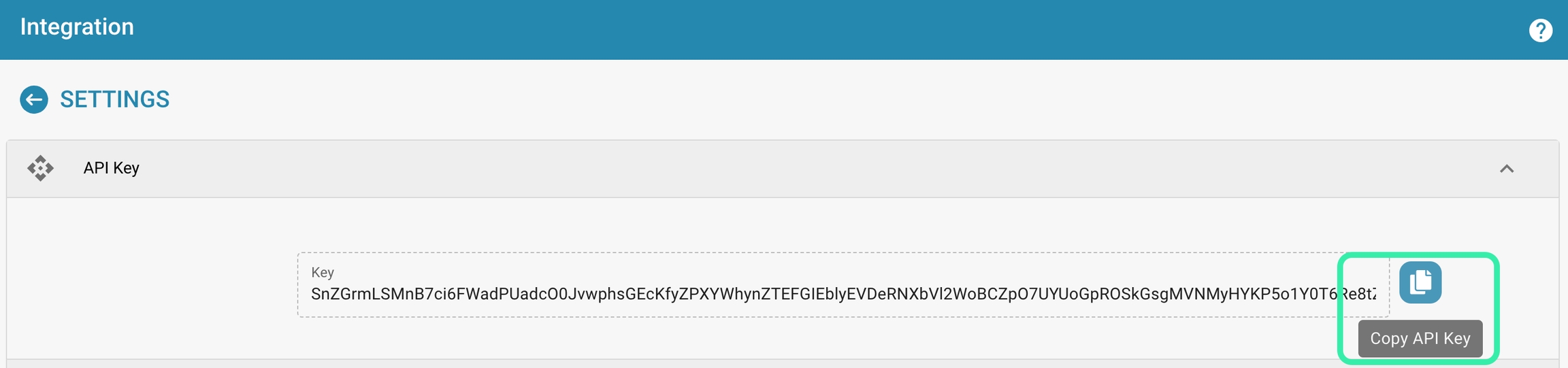

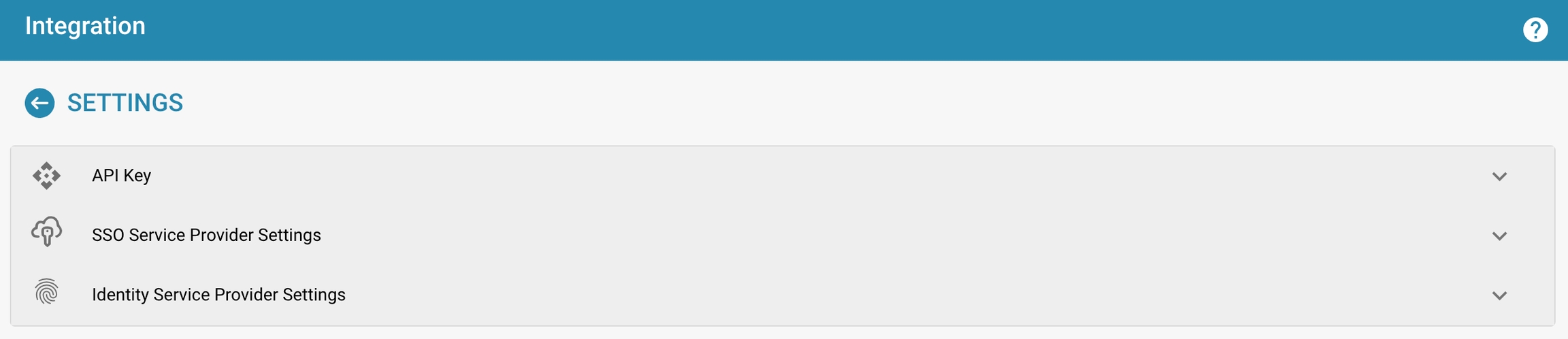

Key: This is the unique identifier used by external applications to access DocBits' API. It is crucial for authenticating requests made to DocBits from other software.

Actions such as view, regenerate, or copy the API key can be performed here, depending on the specific needs and security protocols.

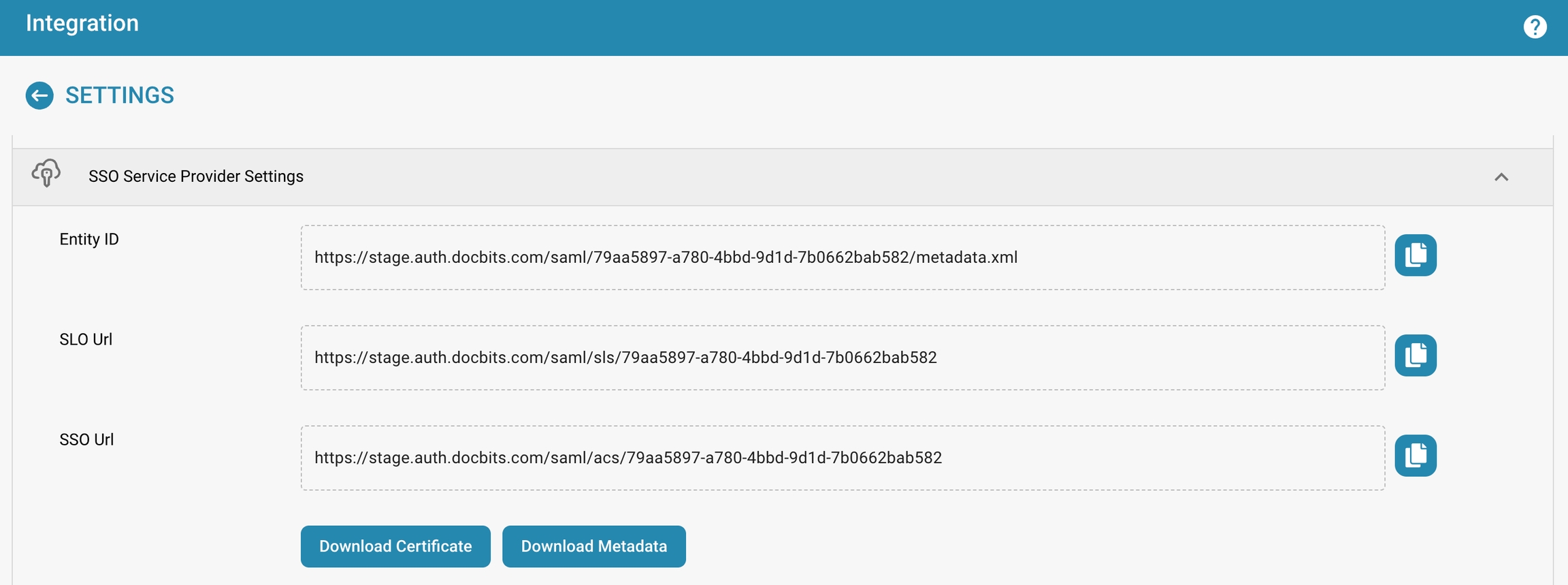

Entity ID: This is the identifier for DocBits as a service provider in the SSO configuration. It uniquely identifies DocBits within the SSO framework.

SLO (Single Logout) URL: The URL to which logout requests are sent so that the user is logged out of all applications connected via SSO at the same time.

SSO URL: The URL used for initiating the single sign-on process.

Actions such as "Download Certificate" and "Download Metadata" are available for obtaining necessary security certificates and metadata information used in setting up and maintaining SSO integration.

See Setup SSO

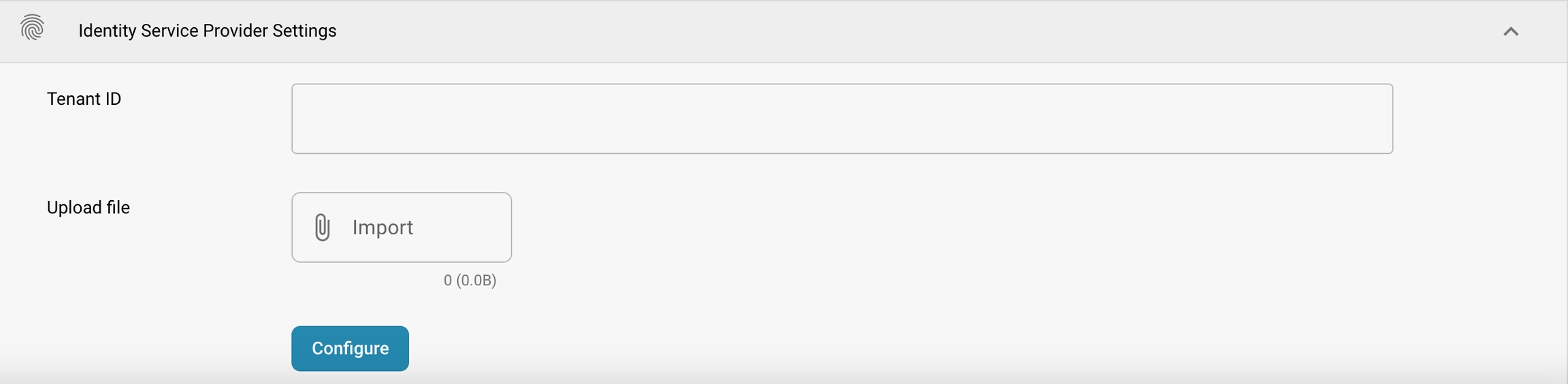

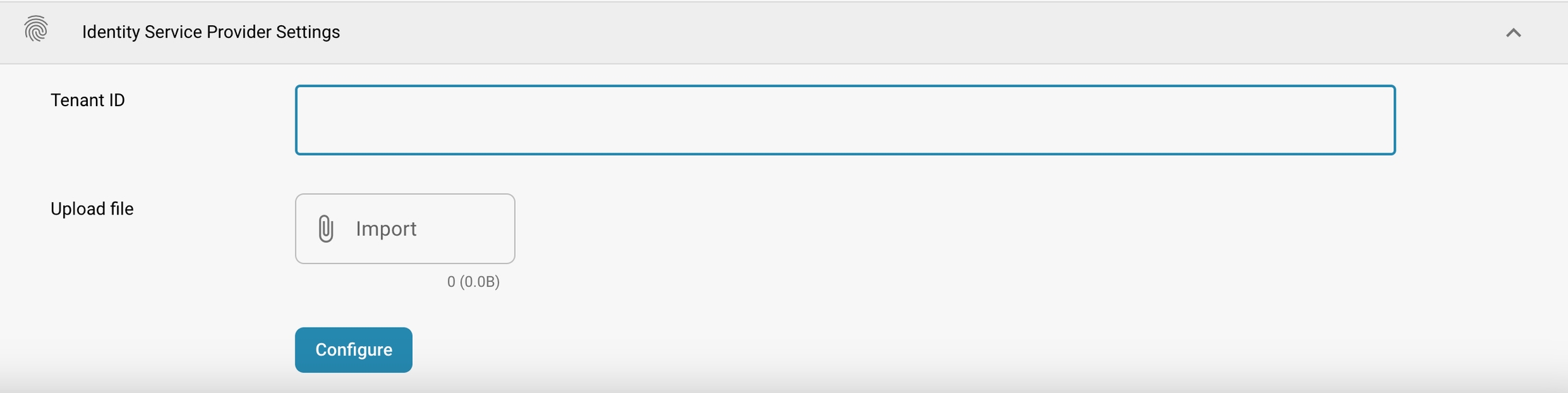

Tenant ID: This might be used when DocBits integrates with cloud services that require a tenant identifier to manage data and access configurations specific to the company using DocBits.

Upload file: Allows the admin to upload configuration files or other necessary files that facilitate integration with an identity provider.

Configure: A button to apply or update the settings after making changes or uploading new configurations.

This guide explains how administrators can define access control settings for different user groups in DocBits. Each group can be configured with custom permissions at document and field level.

The access control panel allows the administrator to manage user groups and their respective permissions. Each group can have specific configurations for:

Document access: Whether the group has access to a document type.

Field-level permissions: Whether the group can read, write, or view certain fields within a document.

Action permissions: Which actions the group can perform, such as editing, deleting, mass-updating, and approving documents.

Navigate to Settings.

Select Document Processing.

Select Module.

Activate Access Control by turning on the slider.

Navigate to Settings.

Navigate to General Settings.

Select Groups, Users and Permissions.

Select Groups and Permissions.

To manage permissions for a group, such as PROCUREMENT_DIRECTOR, click the three dots on the right side of the screen.

Select View Access Control.

Access control overview:

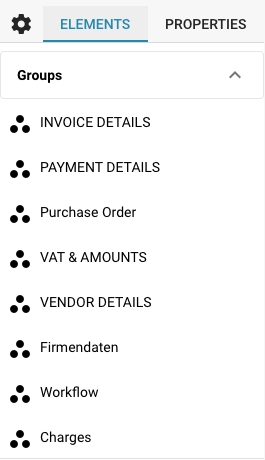

In this section you can enable or disable access for all document types, such as Invoice, Credit Note, Purchase Order, and more.

You can define access levels such as:

Access: Grants access to the document type.

List: Determines whether the document type is visible in the list view.

View: Specifies the default view for the document.

Edit: Grants permission to edit the document.

Delete: Allows the group to delete documents.

Mass update: Enables mass updates of the document type.

Approval levels: Sets the group's ability to approve documents (first and second approval).

Unlock document: Determines whether the group can unlock a document for further editing.

Example settings for PROCUREMENT_DIRECTOR:

Invoice: Enabled for all permissions, including editing and deleting.

Purchase Order: Enabled with standard permissions for all actions.

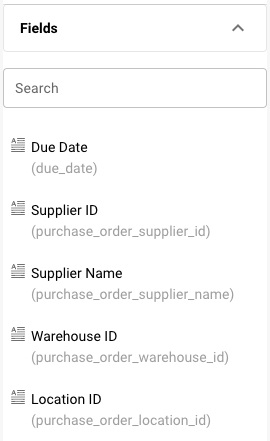

Field-level permissions:

Within each document type, specific fields can be configured with different permission levels.

Permissions include:

Read/Write: Users can both read and write to the field.

Read/Owner Write: Only the owner of the document or field can write; others can read.

Read Only: Users can view the field but cannot change it.

Owner Read/Owner Write: Only the owner of the document or field can read and write.

Approval: Changes must be approved by authorized users or the administrator.

None: No specific permissions apply to the field.
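The four read/write levels can be modeled as a small enumeration with two checks. This is an illustrative sketch rather than the DocBits implementation; the names are hypothetical, and the Approval and None levels are deliberately left out because their exact behavior is not specified here.

```python
from enum import Enum


class FieldPermission(Enum):
    READ_WRITE = "read/write"
    READ_OWNER_WRITE = "read/owner write"
    READ_ONLY = "read only"
    OWNER_READ_OWNER_WRITE = "owner read/owner write"


def can_read(level: FieldPermission, is_owner: bool) -> bool:
    """Everyone may read unless the field is restricted to its owner."""
    return is_owner or level is not FieldPermission.OWNER_READ_OWNER_WRITE


def can_write(level: FieldPermission, is_owner: bool) -> bool:
    """Writing is open for READ_WRITE and owner-only for the owner-write levels."""
    if level is FieldPermission.READ_WRITE:
        return True
    if level is FieldPermission.READ_ONLY:
        return False
    return is_owner
```

For example, a non-owner can read but not write a field set to `READ_OWNER_WRITE`, and can neither read nor write a field set to `OWNER_READ_OWNER_WRITE`.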

In document processing, APIs can be used to automate various tasks such as extracting text from documents, analyzing document contents, converting between different file formats, and more. Here are some examples of APIs in document processing:

OCR (Optical Character Recognition) API: This type of API allows you to extract text from images or scanned documents.

NLP (Natural Language Processing) API: This API enables analysis of text content in documents, including keyword identification, entity recognition, and sentiment analysis.

Conversion API: This type of API allows conversion between different file formats, for example from PDF to Word or from Word to PDF.

Document Management API: This API allows you to upload, download and manage documents in a document management system.

These examples show how APIs can be used in document processing to automate various tasks and improve efficiency. The exact functionality and syntax of the API depends on the particular API and its specific features.

Instructions for viewing, copying or regenerating the API key

API key management is an important aspect when it comes to the security of integrations and access to external services through APIs. Here are some steps to manage API keys and best practices for their security:

View and copy the API key:

Navigate to the API key settings in your DocBits account. Here you can find the API key. Click "Copy" to copy the key.

Handling API keys with security in mind:

Treat API keys like sensitive credentials and never share them with anyone. Store API keys securely and use encryption if you need to store them locally. Update API keys regularly to ensure security and minimize the risk of unauthorized access. Avoid using API keys in public repositories or unsecured environments as they could potentially be intercepted by attackers.

Limit API key permissions:

Give API keys only the permissions required for the specific integration or service. Avoid excessive permissions to minimize the risk of abuse. Regularly review API key permissions and remove unnecessary permissions when they are no longer needed.

Logging and monitoring API calls:

Implement logging and monitoring of API calls to detect suspicious activity or unusual patterns that could indicate potential security breaches. Respond quickly to suspicious activity and, if necessary, revoke affected API keys to minimize the risk of further damage.

By carefully managing and securing API keys, organizations can ensure that their integrations and access to external services via APIs are protected and the risk of unauthorized access is minimized.
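Two of the practices above, keeping keys out of source code and keeping them out of logs, can be sketched in a few lines. The environment variable name `DOCBITS_API_KEY` and both helper functions are assumptions made for this illustration; they are not part of DocBits.

```python
import os


def load_api_key(env=os.environ, var_name="DOCBITS_API_KEY"):
    """Read the key from the environment instead of hard-coding it in source."""
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set")
    return key


def mask_key(key, visible=4):
    """Return a log-safe form that shows only the last `visible` characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

Logging `mask_key(load_api_key())` instead of the key itself lets operators tell keys apart without exposing them.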

Configuring Single Sign-On (SSO) in DocBits requires a few steps to set up and configure. Here is a step-by-step guide:

Accessing SSO settings:

Log in to your DocBits account as an administrator.

Navigate to settings and look for Single Sign-On or SSO.

Configuring SSO parameters:

Enter the required SSO parameters such as the Entity ID, Single Log-Out (SLO) URL, and Single Sign-On (SSO) URL.

The Entity ID is a unique identifier for your service or application.

The SLO URL is the URL used for Single Log-Out to log users out of all services when needed.

The SSO URL is the URL that will redirect users to the Identity Provider for authentication.

Download certificates and metadata:

The identity provider (IdP) typically provides a certificate that DocBits uses to verify the SAML authentication response.

Download the certificate and store it securely.

The metadata download contains all the necessary configuration information for SSO integration. This includes information such as the entity ID, SSO URL, certificate information, and more.

Download the metadata and store it locally or provide it to the identity provider.

Identity provider (IdP) configuration:

Log in to the identity provider and configure the application or service for SAML integration.

Use the downloaded metadata or the manually entered SSO parameters to add DocBits as a trusted application or service.

Make sure the IdP's configuration matches the SSO parameters specified in DocBits.

Testing SSO integration:

After the configuration is complete, perform a test of the SSO integration to ensure that users can successfully log in to DocBits using SSO.

Also, verify that Single Log-Out is working properly by logging out of DocBits and ensuring that you are logged out of other connected services as well.

Setting up SSO properly allows users to seamlessly log in to DocBits using their existing credentials, improving the user experience and increasing security.
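To see what a metadata download contains, the file can be inspected with Python's standard XML parser. The sketch below assumes standard SAML 2.0 service provider metadata with a single `EntityDescriptor` root; the sample document and its URLs are placeholders, not real DocBits values.

```python
import xml.etree.ElementTree as ET

MD_NS = {"md": "urn:oasis:names:tc:SAML:2.0:metadata"}


def read_sp_metadata(xml_text: str) -> dict:
    """Extract the entity ID, ACS URL, and SLO URL from SP metadata XML."""
    root = ET.fromstring(xml_text)
    acs = root.find("md:SPSSODescriptor/md:AssertionConsumerService", MD_NS)
    slo = root.find("md:SPSSODescriptor/md:SingleLogoutService", MD_NS)
    return {
        "entity_id": root.get("entityID"),
        "acs_url": acs.get("Location") if acs is not None else None,
        "slo_url": slo.get("Location") if slo is not None else None,
    }


# Illustrative metadata with placeholder URLs, not real DocBits values.
SAMPLE = """<md:EntityDescriptor xmlns:md="urn:oasis:names:tc:SAML:2.0:metadata"
    entityID="https://sp.example.com/saml">
  <md:SPSSODescriptor protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <md:SingleLogoutService
        Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect"
        Location="https://sp.example.com/saml/slo"/>
    <md:AssertionConsumerService index="0"
        Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST"
        Location="https://sp.example.com/saml/acs"/>
  </md:SPSSODescriptor>
</md:EntityDescriptor>"""
```

Calling `read_sp_metadata(SAMPLE)` returns the three values as a dictionary, which can then be compared with what is shown in the SSO settings.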

Configuring the Identity Service Provider (IdP) to integrate with DocBits requires a few specific steps. Here is a guide to doing that:

Accessing the IdP configuration interface

Log in to your Identity Service Provider (IdP) as an administrator.

Navigate to the settings or configuration interface dedicated to managing SAML integrations.

Entering the Tenant ID:

Look for the section that allows configuration for new SAML integrations.

Enter the DocBits tenant ID. This ID identifies your DocBits account to the IdP and enables secure communication between the two systems.

Importing the required files:

DocBits usually requires downloading metadata or adding specific configuration details. Check your IdP's documentation to see what steps are required.

Download the DocBits metadata file and import it into your IdP's configuration menu. Alternatively, you can manually enter the required configuration details, depending on what your IdP supports.

Configure integration settings:

Make sure the integration settings, such as the SSO URL, Entity ID, and SAML certificate, are correct.

Check that the Single Log-Out (SLO) URL and other required parameters are configured correctly. These are critical for smooth authentication and logout via SAML.

Verify configuration:

Take time to make sure all information entered is correct and that there are no typos or misconfigurations.

Run tests to ensure that users can successfully log into DocBits via SAML and that Single Log-Out is working properly.

Security considerations:

Make sure all transferred files and configuration details are handled securely to avoid data leaks or unauthorized access.

Protect sensitive information such as SAML certificates and credentials from unauthorized access and store them in a safe location.
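Checking that both sides hold the same values is easy to get wrong by eye, for example when a URL differs only by a trailing slash. A comparison like the following can help; it is a minimal sketch in which the setting names and values are hypothetical and would be copied by hand from DocBits and from the IdP.

```python
def find_mismatches(docbits_settings: dict, idp_settings: dict) -> dict:
    """Return {key: (docbits_value, idp_value)} for every value that differs."""
    keys = docbits_settings.keys() | idp_settings.keys()
    return {
        key: (docbits_settings.get(key), idp_settings.get(key))
        for key in sorted(keys)
        if docbits_settings.get(key) != idp_settings.get(key)
    }
```

An empty result means the two configurations agree; a setting that is missing on one side appears with `None` in that position.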

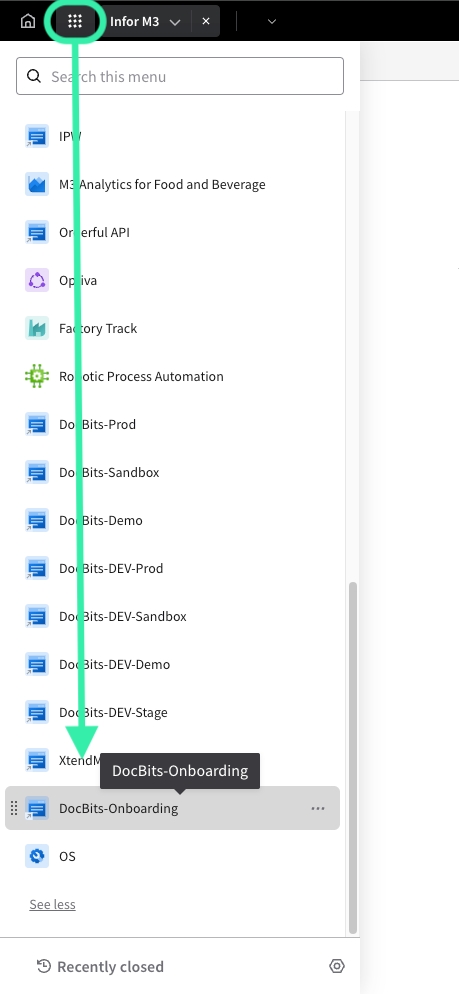

How to set up SSO with INFOR Portal V2

URL starts with https://mingle-portal.eu1.inforcloudsuite.com/<TENANT_NAME> followed by your personal extension

Choose the option Cloud Identities and use your login details

On the new Portal, the way you access this menu now is by selecting the OS option in the left menu. If you do not see it in the menu, click on See More to view all applications.

Select Security, in the OS menu, to be taken to the area for adding a new service provider. The steps are the same from this point on.

Then, in the left-hand menu, select Security Administration and then Service Provider.

You will see this window with the Service Providers.

Now click on the “+” sign and add our DocBits as Service Provider.

Log in on URL https://app.docbits.com/ with the login details you received from us.

Go to SETTINGS (on the top bar) and select INTEGRATION. Under SSO Service Provider Settings you will find all the information you need for the following steps.

Download the certificate

Filling the Service Provider with the help of SSO Service Provider Settings in DocBits

When you have filled out everything remember to save it with the disk icon above Application Type

Enter the service provider DocBits again.

Click on view the Identity Provider Information underneath.

File looks like this: ServiceProviderSAMLMetadata_10_20_2021.xml

Import the SAML METADATA in the SSO Settings.

Go to IDENTITY SERVICE PROVIDER SETTINGS, which is located under INTEGRATIONS in SETTINGS. Enter your Tenant ID (e.g. FELLOWPRO_DEV) and underneath that line you see the Upload file and the IMPORT Button, where you need to upload the previously exported SAML METADATA file.

Click on IMPORT and then choose the METADATA file that you have already downloaded from the SSO SERVICE PROVIDER SETTINGS

Click on CONFIGURE

Final Step

Log out of DocBits.

Go back to the left menu in Infor and select the application you just created.

You will be taken to the Dashboard of DocBits.

Using DocBits with your Microsoft login without a separate password

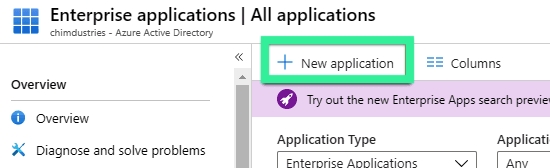

Perform the following steps to add SAML SSO in Azure AD:

In Azure, go to your `Azure Active Directory` console

In the left panel, click `Enterprise applications`

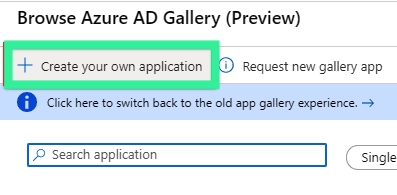

Click `+ New application`

Click `+ Create your own application`

Enter a name for your application. Keep the remaining default selections.

Click on `Create`

Next, assign users or groups to the SSO configuration.

Important: You should already have created users and groups in Azure AD. If you don’t have any users or groups, create them now before proceeding.

Under `Getting Started`, click `Assign Users and Groups`.

Click `+ Add user`

Select the users and groups you want to assign to this SSO configuration. These users will be able to authenticate in DocBits (using SSO).

Click `Select`

When you’re satisfied with your selection, click `Assign`

Go to the `Groups` view list and find the assigned groups.

Next, you need to finish setting up single-sign-on in Azure.

In the left panel, click `Single sign-on`

Click `SAML`

Click `Upload metadata file`

Upload the DocBits metadata.xml, which you can find in the Integration section of the Settings menu of your DocBits account, under SSO Service Provider Settings.

Edit the `Basic SAML Configuration`

Check that the `Entity ID`, `ACS URL`, `Sign on URL` and `Logout URL` are populated correctly.

Download the newly generated Federation Metadata XML.

Upload the FederationMetadata.xml into the Identity Service Provider Settings of your DocBits account, which you can find in the Integration section of the Settings menu.

Click on OS in the left menu (as before) to open a menu where you need to select Portal. Next, click on + Add Application on the right and fill in the following information. The URL field is the SSO Endpoint URL from the Integration area in your DocBits settings. A Logical ID will also be generated for you. When done, click Save.

| Field | Value |
| --- | --- |
| Application Type | DEFAULT_SAML |
| Display Name | DocBits |
| Entity ID | See Entity ID under SSO SERVICE SETTINGS |
| SSO Endpoint | Copy the SSO URL from SSO SERVICE SETTINGS and paste it in the SSO Endpoint field. |
| SLO Endpoint | Copy the SLO URL from SSO SERVICE SETTINGS and paste it in the SLO Endpoint field. |
| Signing Certificate | Upload the appropriate .cer file you have downloaded in step 3c) from SSO SERVICE SETTINGS |
| Name ID Format and Mapping | email address |

Here are troubleshooting steps for common issues while integrating DocBits with an Identity Service Provider (IdP) or other services:

Verify configuration: Make sure the integration settings in DocBits are correctly configured and match your Identity Service Provider's requirements. In particular, check the SSO URL, Entity ID, and certificates.

Monitor logs: Monitor logs in both DocBits and on the Identity Service Provider's side to identify any error messages or warnings. These logs can provide clues as to where the problem lies.

Verify network connections: Make sure there are no network issues that could affect communication between DocBits and your Identity Service Provider. Check firewall settings, DNS configurations, and network access rules.

Test SSO processes: Perform test logins via Single Sign-On (SSO) to ensure users are successfully authenticated and redirected to DocBits. Be sure to note any error messages or redirection issues.

Checking permissions: Make sure users in DocBits have the required permissions to access the appropriate features or resources. Check the assignment of groups and roles.

Updating certificates: Make sure the SAML certificates and metadata are up to date on both sides. If a certificate has expired, you must update it and retest the integration.

Communicating with support: If you cannot identify the cause of the problem, contact DocBits support or your identity service provider. They can help you troubleshoot and provide specific guidance for your setup.

By following these troubleshooting steps, you can quickly identify and resolve the most common issues during the integration between DocBits and your identity service provider.

By accurately classifying documents into specific types, they can be easily categorized and managed. This makes it easier to find and retrieve documents when they are needed.

Automated workflows:

Many document management systems, including DocBits, use document types to drive automated workflows. For example, invoices can be automatically routed for approval while contract documents are sent for signature. Correct document type mapping allows these processes to be carried out efficiently and without errors.

Rights management and security:

Different document types can be subject to different access controls and security levels. Classifying documents correctly ensures that only authorized people have access to sensitive information.

Compliance and legal requirements:

Many industries are subject to strict legal and regulatory requirements regarding the handling of documents. Setting up document types correctly helps ensure that all necessary compliance requirements are met by handling and storing documents according to their category.

Defining specific document types:

Every type of document managed in the system should have a clearly defined document type. This includes, for example, invoices, contracts, reports, emails and technical drawings.

Attribution and metadata:

Each document type should have specific attributes and metadata that facilitate its classification and processing. For example, invoices could contain attributes such as invoice number, date and amount, while contracts have attributes such as contract parties, term and conditions.

Automation rules and workflows:

Specific rules and workflows should be defined for each document type. This can include automatic notifications, approval processes or archiving policies.

Training and user guidance:

Users should be trained to use the document types correctly and understand the importance of correct classification. This helps to minimize errors and maximize efficiency.

Regular review and adjustment:

The document types and associated processes should be regularly reviewed and adjusted as necessary to ensure they continue to meet current business needs and processes.

Setting up document types correctly is a key aspect of effectively using a document management system like DocBits. Not only does it make documents better organized and easier to find, it also enables automated processes, increases security, and ensures regulatory compliance. To fully realize the benefits, document types must be carefully defined, the corresponding processes implemented, and users trained regularly.

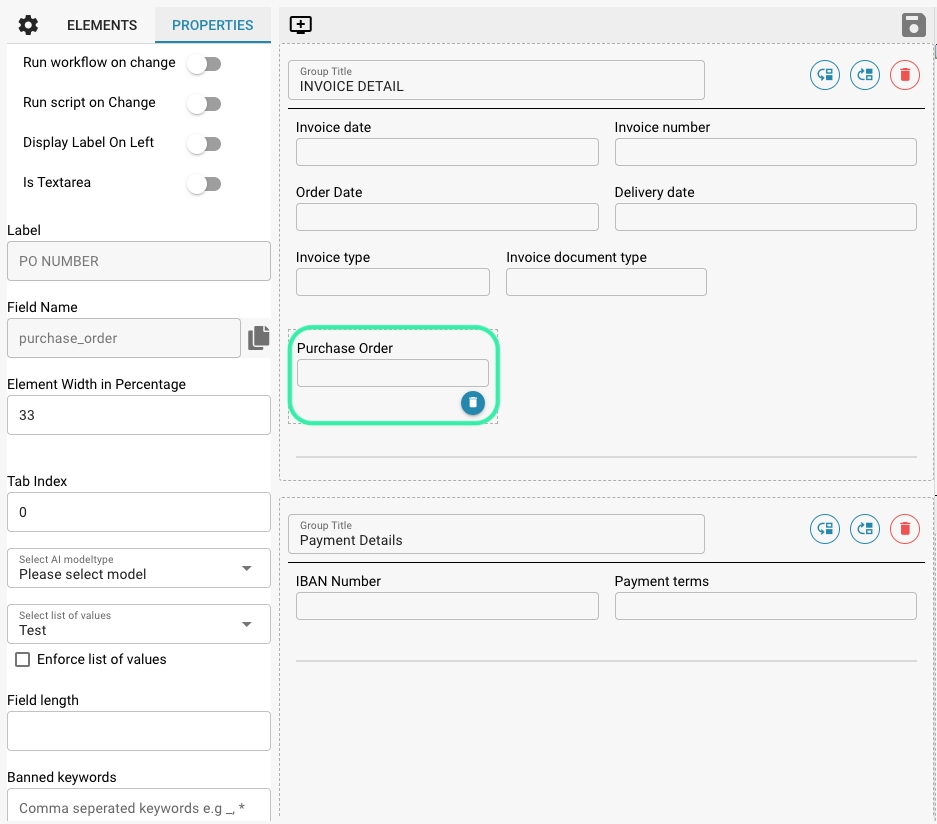

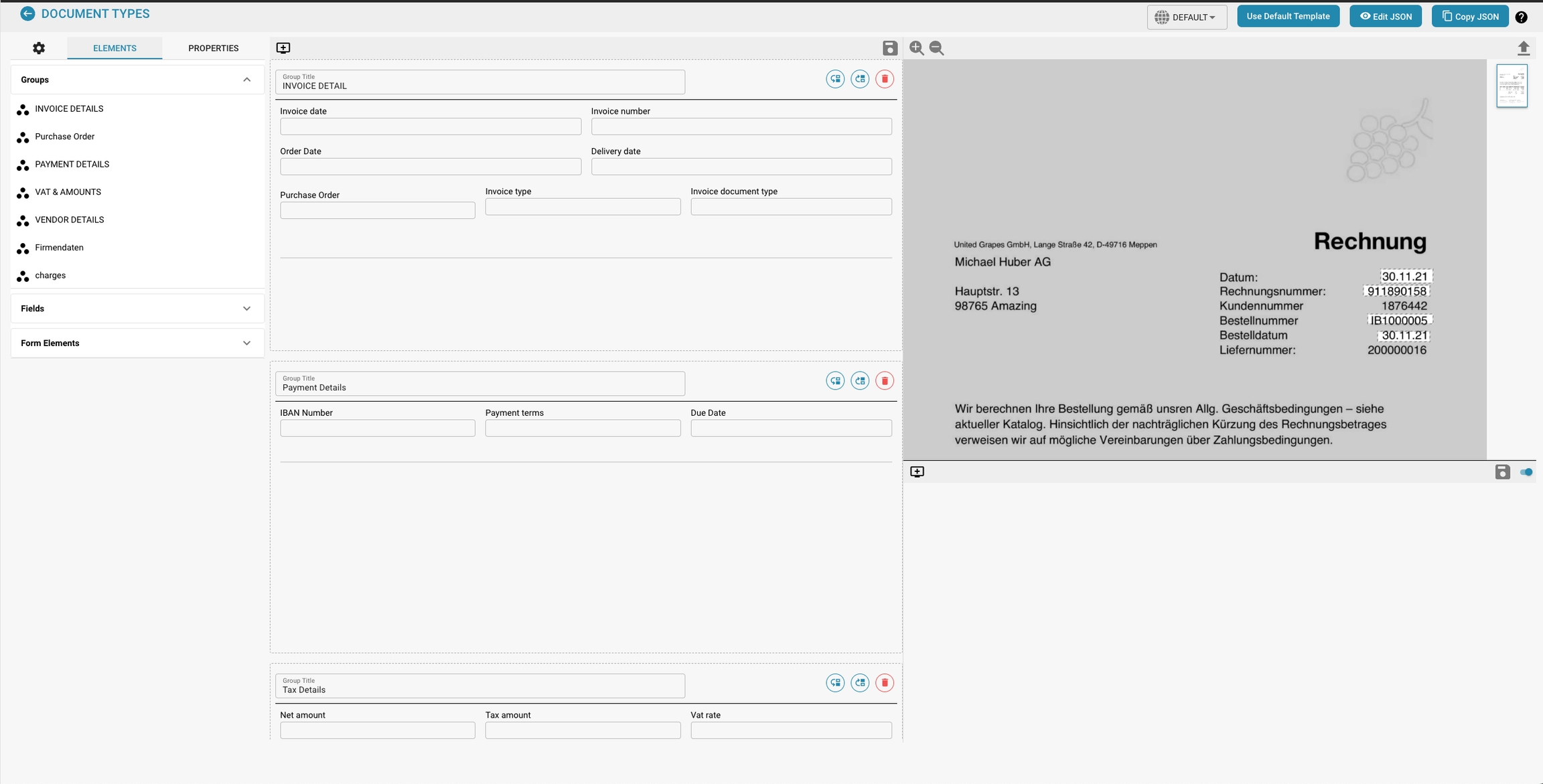

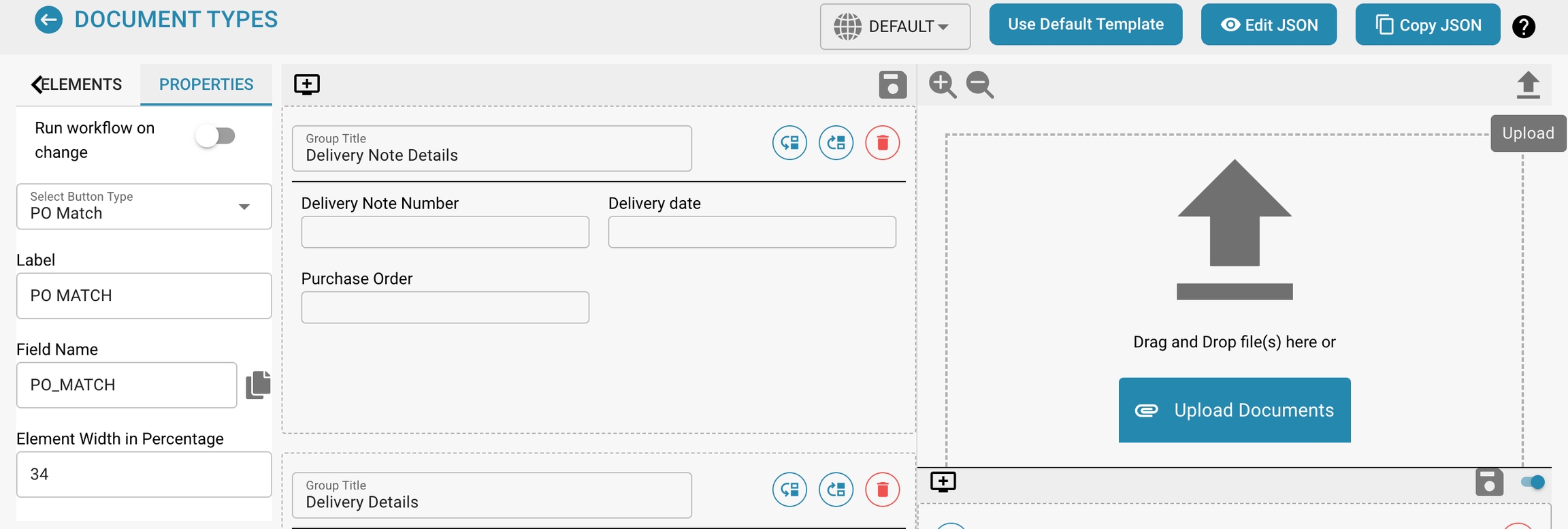

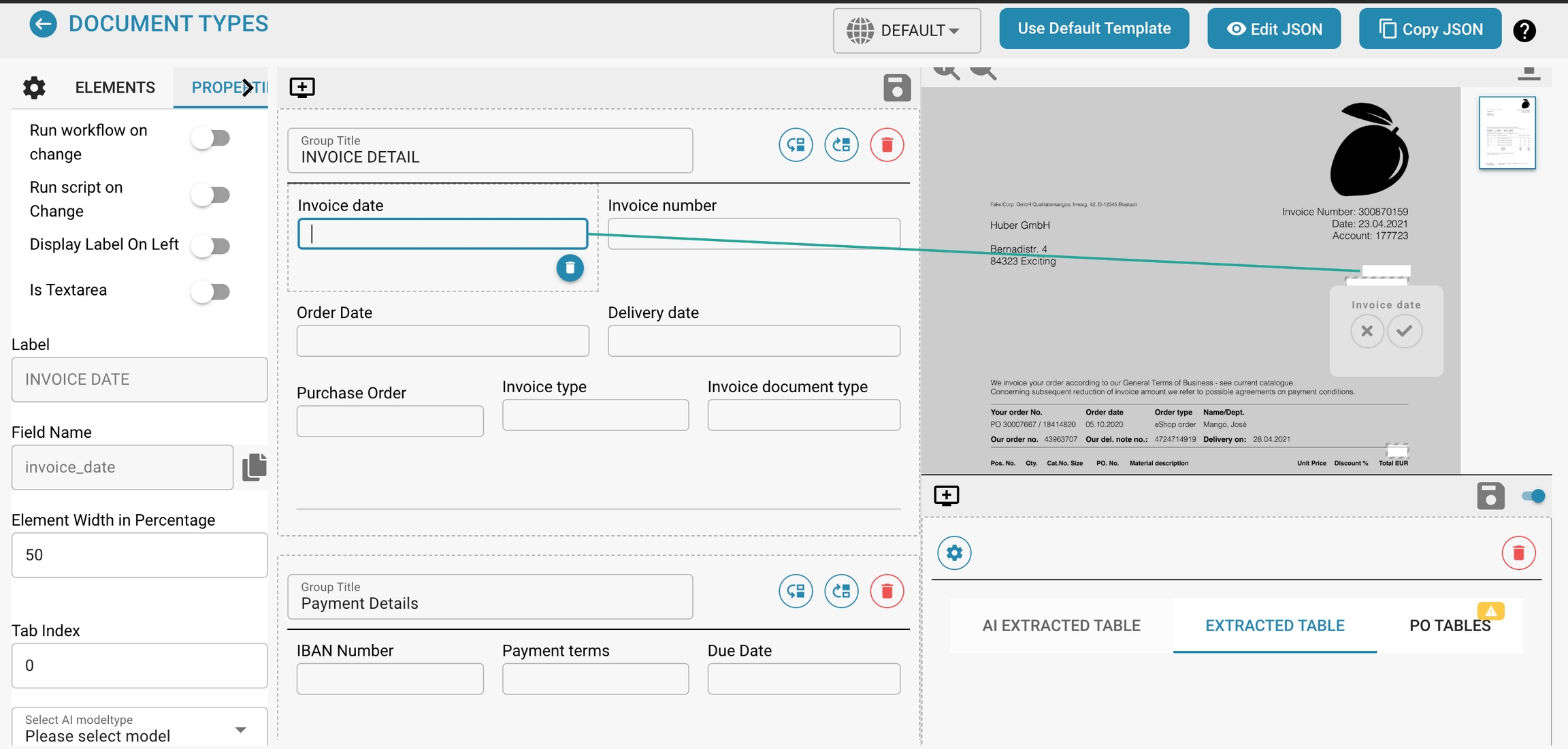

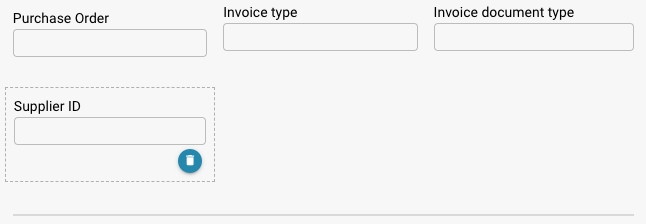

Layout Configuration Description:

The layout configuration determines the structure and appearance of a document type.

Options:

Templates:

Upload or create document templates that define the general layout.

Zones:

Specify specific areas (zones) on the document, e.g. header, footer, content area.

Impact:

Improved accuracy:

By accurately defining layouts, systems can better identify where to find certain information, improving the accuracy of data extraction.

Consistency:

Ensuring that all documents of a type have a consistent layout makes processing and review easier.

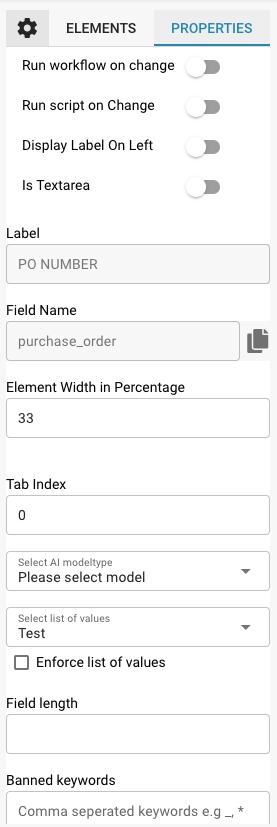

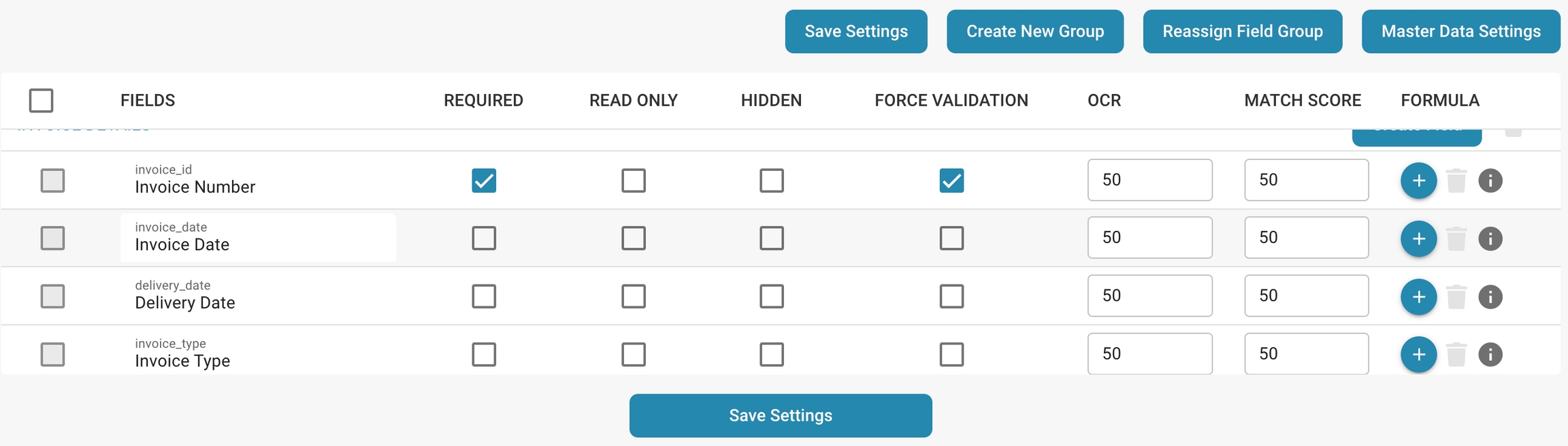

Field Definitions Description:

Fields are specific data points extracted from documents.

Options:

Field name: The name of the field (e.g. "Invoice number", "Date", "Amount").

Data type: The type of data contained in the field (e.g. text, number, date).

Format: The format of the data (e.g. date in DD/MM/YYYY format).

Required field: Indicates whether a field is mandatory.

Impact:

Data extraction accuracy:

Defining fields precisely allows the correct data to be extracted reliably.

Error reduction:

Clear specification of field formats and data types reduces the likelihood of errors during data processing.

Automated validation:

Required fields and specific formats enable automatic validation of the extracted data.
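The four field options above map naturally onto a small data structure with a validation function. This is a hedged sketch: the `FieldDefinition` class and `validate` function are hypothetical and do not reflect how DocBits stores field definitions. The DD/MM/YYYY format corresponds to the `strptime` pattern `%d/%m/%Y`.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class FieldDefinition:
    name: str
    data_type: str          # "text", "number", or "date"
    date_format: str = ""   # strptime pattern, used only for dates
    required: bool = False


def validate(field: FieldDefinition, value: str) -> list:
    """Return a list of error messages; an empty list means the value is valid."""
    if not value:
        return [f"{field.name} is required"] if field.required else []
    try:
        if field.data_type == "number":
            float(value)
        elif field.data_type == "date":
            datetime.strptime(value, field.date_format)
    except ValueError:
        return [f"{field.name} is not a valid {field.data_type}"]
    return []


# A required date field in DD/MM/YYYY format.
invoice_date = FieldDefinition("Date", "date", "%d/%m/%Y", required=True)
```

Here `validate(invoice_date, "31/12/2024")` returns an empty list, while an empty value or a date in another format produces an error message.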

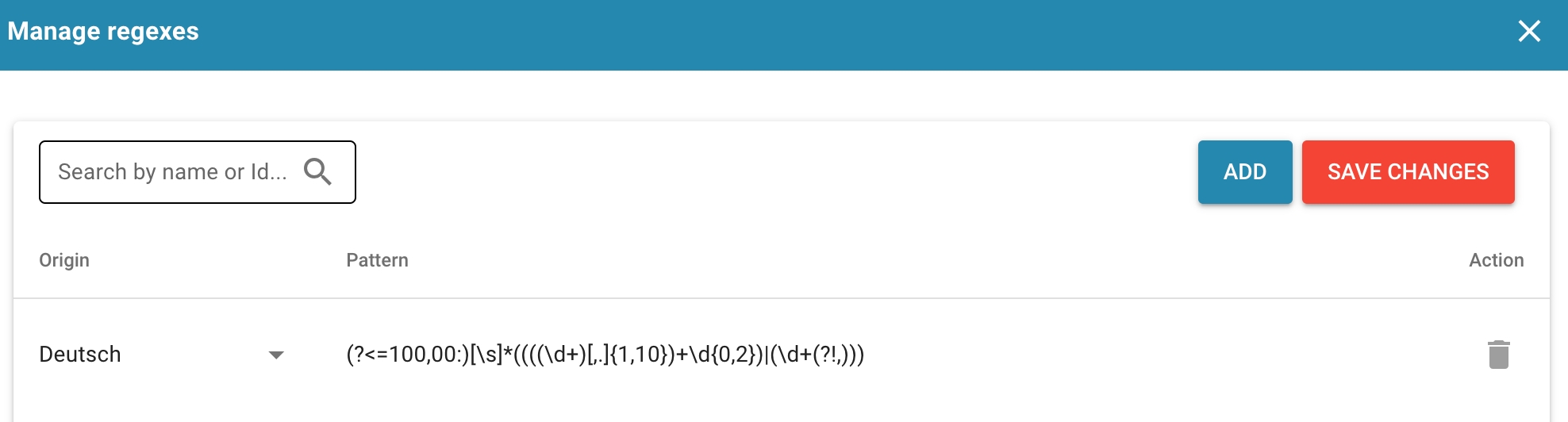

Extraction rules Description:

Rules that determine how data is extracted from documents.

Options:

Regular expressions:

Using regular expressions to match patterns.

Anchor points:

Using specific text anchors to identify the position of fields.

Artificial intelligence:

Using AI models for data extraction based on pattern recognition and machine learning.

Impact:

Precision:

By applying specific extraction rules, data can be extracted precisely and reliably.

Flexibility:

Customizable rules make it possible to adapt the extraction to different document layouts and contents.

Efficiency:

Automated extraction rules reduce manual effort and speed up data processing.
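The first two rule types can be illustrated with Python's `re` module. The pattern, the anchor texts, and the sample document below are hypothetical; real rules depend on the layout of your documents and on how DocBits applies them.

```python
import re

# Hypothetical pattern: the anchor text "Invoice number" followed by an ID.
INVOICE_NO = re.compile(r"Invoice\s+number\s*[:#]?\s*([A-Z0-9-]+)", re.IGNORECASE)


def extract_invoice_number(text: str):
    """Regex extraction: return the ID that follows the label, or None."""
    match = INVOICE_NO.search(text)
    return match.group(1) if match else None


def value_after_anchor(lines, anchor: str):
    """Anchor-point extraction: return the text after `anchor` on its line."""
    for line in lines:
        if anchor in line:
            return line.split(anchor, 1)[1].strip(" :\t")
    return None
```

The regular expression tolerates variations in spacing and punctuation after the label, whereas the anchor function simply returns whatever follows the anchor text on the same line.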

Validation rules Description:

Rules for checking the correctness and completeness of the extracted data.

Options:

Format check: Validating the data format (e.g. whether a date is correctly formatted).

Value check: Checking whether the extracted values are within a certain range.

Cross-check: Comparing the extracted data with other data sources or data fields in the document.

Impact:

Data quality:

Ensuring that only correct and complete data is stored.

Error prevention:

Automatic validation reduces the risk of human error.

Compliance:

Adhering to regulations and standards through accurate data validation.
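The three kinds of check can be combined in one function. This is an illustrative sketch with assumed field names (`date`, `net`, `tax`, `total`) and an assumed upper limit; it is not the DocBits validation engine.

```python
from datetime import datetime
from decimal import Decimal


def validate_invoice(data: dict, max_total: Decimal = Decimal("100000")) -> list:
    """Apply a format check, a value check, and a cross-check; return errors."""
    errors = []
    # Format check: the date must match DD/MM/YYYY.
    try:
        datetime.strptime(data["date"], "%d/%m/%Y")
    except ValueError:
        errors.append("date: expected DD/MM/YYYY")
    net, tax, total = (Decimal(data[k]) for k in ("net", "tax", "total"))
    # Value check: the total must lie within the allowed range.
    if not Decimal("0") < total <= max_total:
        errors.append("total: out of range")
    # Cross-check: net plus tax must equal the stated total.
    if net + tax != total:
        errors.append("total: does not equal net + tax")
    return errors
```

Amounts are parsed as `Decimal` rather than `float` so that the cross-check is not affected by binary rounding.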

Automation workflows Description:

Workflows that automate the processing steps of a document type.

Options:

Approval processes:

Automatic forwarding of documents for approval.

Notifications:

Automatic notifications for certain events (e.g. receipt of an invoice).

Archiving:

Automatic archiving of documents according to certain rules.

Impact:

Increased efficiency:

Automated workflows speed up processing and reduce manual effort.

Transparency:

Clear and traceable processes increase the transparency and traceability of document processing.

Compliance:

Automated workflows ensure that all steps are carried out in accordance with internal guidelines and legal regulations.
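Conceptually, a workflow of this kind is a mapping from document type to an ordered list of steps. The routing table and step names below are hypothetical and only illustrate the idea; actual workflows are configured in DocBits itself.

```python
# Hypothetical routing table: document type -> ordered workflow steps.
WORKFLOWS = {
    "invoice": ["notify_accounting", "request_approval", "archive"],
    "contract": ["request_signature", "archive"],
}


def plan_workflow(document_type: str) -> list:
    """Return the steps for a document type, or manual review if unknown."""
    return list(WORKFLOWS.get(document_type.lower(), ["manual_review"]))
```

Falling back to a manual review step for unknown types keeps unclassified documents from silently skipping approval.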

User rights and access control Description:

Control of access to document types and their fields.

Options:

Role-based access control:

Specify which users or user groups can access certain document types.

Security levels:

Assign security levels to document types and fields.

Impact:

Data security:

Protect sensitive data through restricted access.

Compliance:

Compliance with data protection regulations through targeted access controls.

User-friendliness:

Adaptation of the user interface depending on role and authorization increases user-friendliness.

The extensive customization options for document types in DocBits enable precise control of document processing and data extraction. By carefully configuring layouts, fields, extraction and validation rules, automation workflows, and user rights, organizations can ensure that their documents are processed efficiently, accurately, and securely. These customization options go a long way in optimizing the overall performance of the document management system and meeting the specific needs of the organization.

A Layout Manager enables an orderly and structured presentation of information.

Setting placement rules for different data elements ensures that information is presented consistently and clearly.

Using a Layout Manager enables users to capture information more efficiently.

A well-designed layout results in users knowing intuitively where to enter specific data, which speeds up data capture and reduces the risk of errors.

Consistent layouts ensure consistency in documentation.

When different documents use the same Layout Manager, a consistent presentation of information across different documents is ensured.

This is especially important in environments where many different users access or collaborate on documents.

A Layout Manager enables the appearance of documents to be customized depending on requirements.

Depending on the type of document or specific requirements, layouts can be customized to better present different types of data or information.

A well-configured layout manager makes it easier to scale documents.

When new data needs to be added or requirements change, the layout manager can be customized to easily handle those changes without the need for a major redesign.

Overall, using a layout manager is critical to ensure that data is captured and organized accurately. A well-designed layout improves the user experience, promotes efficiency in data entry, and contributes to the consistency and adaptability of documents.

Configuring document types in DocBits requires care and expertise to ensure that document processing is efficient and accurate. Here are some best practices for configuring document types, including recommendations for setting up effective regex patterns and tips for training models to improve accuracy:

Best practices

Requirements analysis:

Conduct a thorough analysis of the requirements to understand which document types are needed and what information needs to be extracted from them.

Pilot projects:

Start with pilot projects to test the configuration and extraction rules before applying them to the entire system.

Best practices

Consistency:

Make sure that documents of one type have a consistent layout. This makes configuration and data extraction easier.

Use templates:

Use document templates to ensure consistency and simplify setup.

Best practices

Unique field names:

Use unique and meaningful names for fields to avoid confusion.

Relevant metadata:

Define only the fields that are really necessary to reduce complexity and increase efficiency.

Formatting guidelines:

Set clear formatting guidelines for each field to facilitate validation and extraction.

Best practices

Use quality data:

Use high-quality and representative data to train the models.

Data enrichment:

Enrich the training dataset by adding different document examples to increase the robustness of the model.

Iterative training:

Train the model iteratively and evaluate the results regularly to achieve continuous improvements.

Tips:

Transfer learning:

Leverage pre-trained models and tune them with specific document examples to reduce training time and increase accuracy.

Hyperparameter tuning:

Experiment with different hyperparameters to find the optimal configuration for your model.

Best practices

Multi-step validation:

Implement multi-step validation rules to check the correctness of the extracted data.

Combine rule-based and ML-based approaches:

Use a combination of rule-based and machine learning approaches to extract and validate data.

Error management:

Set up mechanisms to detect and fix faulty extractions.

Best practices

Clearly defined workflows:

Define clear and traceable automation workflows for each document type.

Continuous monitoring:

Monitor automation workflows regularly to evaluate their performance and identify optimization potential.

Incorporate user feedback:

Integrate user feedback to continuously improve workflows.

Best practices

Role-based access:

Implement role-based access controls to ensure that only authorized users have access to certain document types and fields.

Regular review:

Regularly review access controls and adapt them to changing requirements.

Configuring document types in DocBits requires careful planning and continuous adjustment to achieve optimal results. By applying the best practices above, you can significantly increase the efficiency and accuracy of document processing and data extraction.

Check consistency:

Make sure all documents of the type have a consistent layout. Variations in layout can affect recognition.

Check zones and areas:

Check that the defined zones and areas are positioned correctly and cover the relevant information.

Update templates:

If the layout of the documents changes, update the templates accordingly.

Field names and data types:

Make sure field names are correct and data types are properly defined.

Formatting guidelines:

Check that the formatting guidelines for the fields are correct and match the actual data.

Check required fields:

Make sure all required fields are correctly recognized and filled in.

Test regex patterns:

Use a regex tool to test the patterns and make sure they capture the desired data correctly.

Increase specificity:

Adjust the regex patterns to be more specific and avoid misinterpretation.

Check anchor points:

Make sure the anchor points for data extraction are set correctly. If the pattern is not working correctly, check if special characters or different formats need to be considered.
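Testing a pattern against samples that should and should not match makes the benefit of a more specific pattern visible. The helper and both patterns below are illustrative assumptions, not patterns shipped with DocBits.

```python
import re


def check_pattern(pattern: str, should_match, should_not_match) -> list:
    """Return the samples on which the pattern behaves unexpectedly."""
    regex = re.compile(pattern)
    failures = [s for s in should_match if not regex.fullmatch(s)]
    failures += [s for s in should_not_match if regex.fullmatch(s)]
    return failures


# Loose: accepts any three digit groups separated by slashes.
LOOSE = r"\d+/\d+/\d+"
# Specific: two-digit day 01-31, two-digit month 01-12, four-digit year.
SPECIFIC = r"(0[1-9]|[12]\d|3[01])/(0[1-9]|1[0-2])/\d{4}"
```

Run against samples such as `32/01/2024`, the loose pattern reports false matches while the specific one does not. The specific pattern still accepts impossible dates such as `31/02/2024`, so a format validation step after extraction remains useful.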

Analyze error messages:

Examine the error messages and log files for evidence of incorrect validations.

Refine rules:

Adjust the validation rules to make them more flexible or stricter if necessary.

Multi-step validation:

Implement additional validation steps to improve data quality.

Collect representative data:

Make sure the training data covers a wide range of examples that reflect all possible variations.

Retrain models:

Retrain the models regularly, especially when new document variants are added.

Feedback loops:

Use feedback loops to continuously improve the models.

Review workflow steps:

Review each step in the workflow to ensure that the data is processed and routed correctly.

Analyze logs:

Analyze the workflow logs to identify and resolve sources of errors.

Collect user feedback:

Ask users about their experiences and issues with the workflows to identify potential weak points.

Review access rights:

Make sure the right users have access to the relevant document types and fields.

Track changes:

Check whether recent changes in access rights may have affected document processing.

Regular review:

Perform regular access rights reviews to ensure everything is configured correctly.
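A regular access review can be as simple as comparing the access that is currently configured with the access each role is supposed to have. The sketch below assumes the data has been collected by hand. The roles, users, and assignments are invented, and nothing here reads from DocBits.

```python
# Hypothetical roles, users, and document types; not read from DocBits.
POLICY = {
    "accounting": {"Invoice", "Credit Note"},
    "logistics": {"Delivery Note"},
}

USER_ROLES = {"anna": "accounting", "ben": "logistics"}

# Access as currently configured (for example, noted down by hand for the review).
CURRENT_ACCESS = {
    "anna": {"Invoice", "Credit Note"},
    "ben": {"Delivery Note", "Invoice"},
}

def review_access(policy, user_roles, current_access):
    """Return {user: (excess, missing)} for users whose access deviates from the policy."""
    findings = {}
    for user, role in user_roles.items():
        allowed = policy.get(role, set())
        actual = current_access.get(user, set())
        excess, missing = actual - allowed, allowed - actual
        if excess or missing:
            findings[user] = (excess, missing)
    return findings

findings = review_access(POLICY, USER_ROLES, CURRENT_ACCESS)
print(findings)
```

In this made-up data, only `ben` is reported, because his access includes a document type that the `logistics` role does not cover.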

Consult documentation:

Use the DocBits system documentation and support resources to find solutions to problems.

Provide training:

Make sure all users are adequately trained to avoid common errors.

Updates and patches:

Keep the system up to date by regularly applying updates and patches that contain bug fixes and improvements.

Troubleshooting document type configuration requires a systematic approach and careful review of all aspects of the configuration. By applying the tips above, you can identify and fix common problems to improve the accuracy and efficiency of document processing in DocBits.

Group related fields together to create a logical and intuitive structure. This makes it easier for users to navigate and enter data.

Arrange fields so that frequently used or important information is easily accessible and placed in a prominent location.

Identify all required data fields and mark them accordingly. Ensure users are prompted to enter all necessary information to avoid incomplete records.

Use validation rules to ensure that entered data conforms to expected formats and criteria.

Use clear and precise labels for fields to help users enter the expected data.

Add instructions or notes when additional information is required to ensure users provide the correct data.

Test the layout and data entry thoroughly to ensure that all data is captured and stored correctly. Collect feedback from users and make adjustments to continuously improve user experience and data integrity.

Check the configuration of the fields in the Layout Manager and make sure they match the actual fields in the scanned documents.

Check that the positions and dimensions of the fields in the layout are correct and that they cover all relevant information.

Check the validation rules and format settings for the affected fields to make sure that the expected data can be captured correctly.

Make sure that the OCR (Optical Character Recognition) or other data capture technologies are properly configured and calibrated to ensure accurate extraction of the data.

Check the validation rules for fields to make sure they are appropriate and configured correctly.

Adjust the validation rules if necessary to ensure that they meet the requirements and formats of the captured data.

Revise the layout to improve the structure and organization of fields and ensure that important information is easily accessible.

Run user testing to get feedback on the usability of the layout and make adjustments to increase efficiency.

By applying these best practices and troubleshooting as appropriate, you can create efficient and accurate document layouts that enable smooth data capture and processing.

Login Details to Cloud

Credentials are mandatory for accessing the Infor Cloud environment. The user should have the roles "Infor-SystemAdministrator" and "UserAdmin".

Config Admin Details (DocBits)

You should have received an email from FellowPro AG with the login details for the DocBits SSO Settings page. You will need a login and password.

Certificate

You can download the certificate in DocBits under SSO Service Provider Settings

How to set up SSO with INFOR Portal V1 (LN and older M3 Interface)

The URL starts with https://mingle-portal.eu1.inforcloudsuite.com/<TENANT_NAME> followed by your personal extension.

Choose the option Cloud Identities and use your login details.

After logging in, you have access to the Infor Cloud. Whichever page you land on, the burger menu gives you access to all applications.

On the right-hand side of the menu bar, you will find the user menu, from which you can access the user management.

In the left-hand menu, select Security Administration and then Service Provider.

You will see this window with the Service Providers.

Now click on the “+” sign and add DocBits as a Service Provider.

Log in at https://app.docbits.com/ with the login details you received from us.

Go to SETTINGS (on the top bar) and select INTEGRATION. Under SSO Service Provider Settings you will find all the information you need for the following steps.

Download the certificate.

Fill in the Service Provider form using the SSO Service Provider Settings in DocBits:

Application Type: DEFAULT_SAML

Display Name: DocBits

Entity ID: See Entity ID under SSO SERVICE SETTINGS.

SSO Endpoint: Copy the SSO URL from SSO SERVICE SETTINGS and paste it into the SSO Endpoint field.

SLO Endpoint: Copy the SLO URL from SSO SERVICE SETTINGS and paste it into the SLO Endpoint field.

Signing Certificate: Upload the appropriate .cer file you have downloaded in step 3c) from SSO SERVICE SETTINGS.

Name ID Format and Mapping: email address

When you have filled out everything, save the entry using the disk icon above Application Type.

Open the DocBits service provider entry again.

Click on View the Identity Provider Information underneath.

The file name looks like this: ServiceProviderSAMLMetadata_10_20_2021.xml

Import the SAML METADATA in the SSO Settings.

Go to IDENTITY SERVICE PROVIDER SETTINGS, which is located under INTEGRATIONS in SETTINGS. Enter your Tenant ID (e.g. FELLOWPRO_DEV) and underneath that line you see the Upload file and the IMPORT Button, where you need to upload the previously exported SAML METADATA file.

Click on IMPORT and then choose the METADATA file that you have already downloaded from the SSO SERVICE PROVIDER SETTINGS

Click on CONFIGURE

Go to Admin settings

Click on ADD APPLICATION in the top right corner

Fill out all fields as shown in the following image, but with your own SSO URL. Don’t forget to choose an icon, then click on SAVE.

Final Step

Log out of DocBits.

Go back to the burger menu in Infor and select the icon you just created.

You will be taken to the DocBits dashboard.
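Before importing the SAML metadata file exported in the steps above, it can help to check that it contains the entity ID and endpoints you expect. The sketch below uses only the Python standard library and the standard SAML 2.0 metadata namespace. The sample XML and its example.com URLs are made up, and a real export will contain more elements (for example, certificates).

```python
import xml.etree.ElementTree as ET

MD = "urn:oasis:names:tc:SAML:2.0:metadata"  # SAML 2.0 metadata namespace

# Made-up sample; in practice read the exported file with ET.parse(path).getroot().
SAMPLE = f"""<EntityDescriptor xmlns="{MD}" entityID="https://idp.example.com/tenant">
  <IDPSSODescriptor protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <SingleLogoutService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect"
                         Location="https://idp.example.com/slo"/>
    <SingleSignOnService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect"
                         Location="https://idp.example.com/sso"/>
  </IDPSSODescriptor>
</EntityDescriptor>"""

def summarize_metadata(root: ET.Element) -> dict:
    """Collect the entity ID and the SSO/SLO endpoint locations from SAML metadata."""
    def locations(tag: str) -> list:
        return [el.get("Location") for el in root.iter(f"{{{MD}}}{tag}")]
    return {
        "entity_id": root.get("entityID"),
        "sso": locations("SingleSignOnService"),
        "slo": locations("SingleLogoutService"),
    }

summary = summarize_metadata(ET.fromstring(SAMPLE))
print(summary)
```

If the entity ID or an endpoint list comes back empty for your file, the export is probably incomplete or not a SAML metadata document, and it is worth exporting it again before importing.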

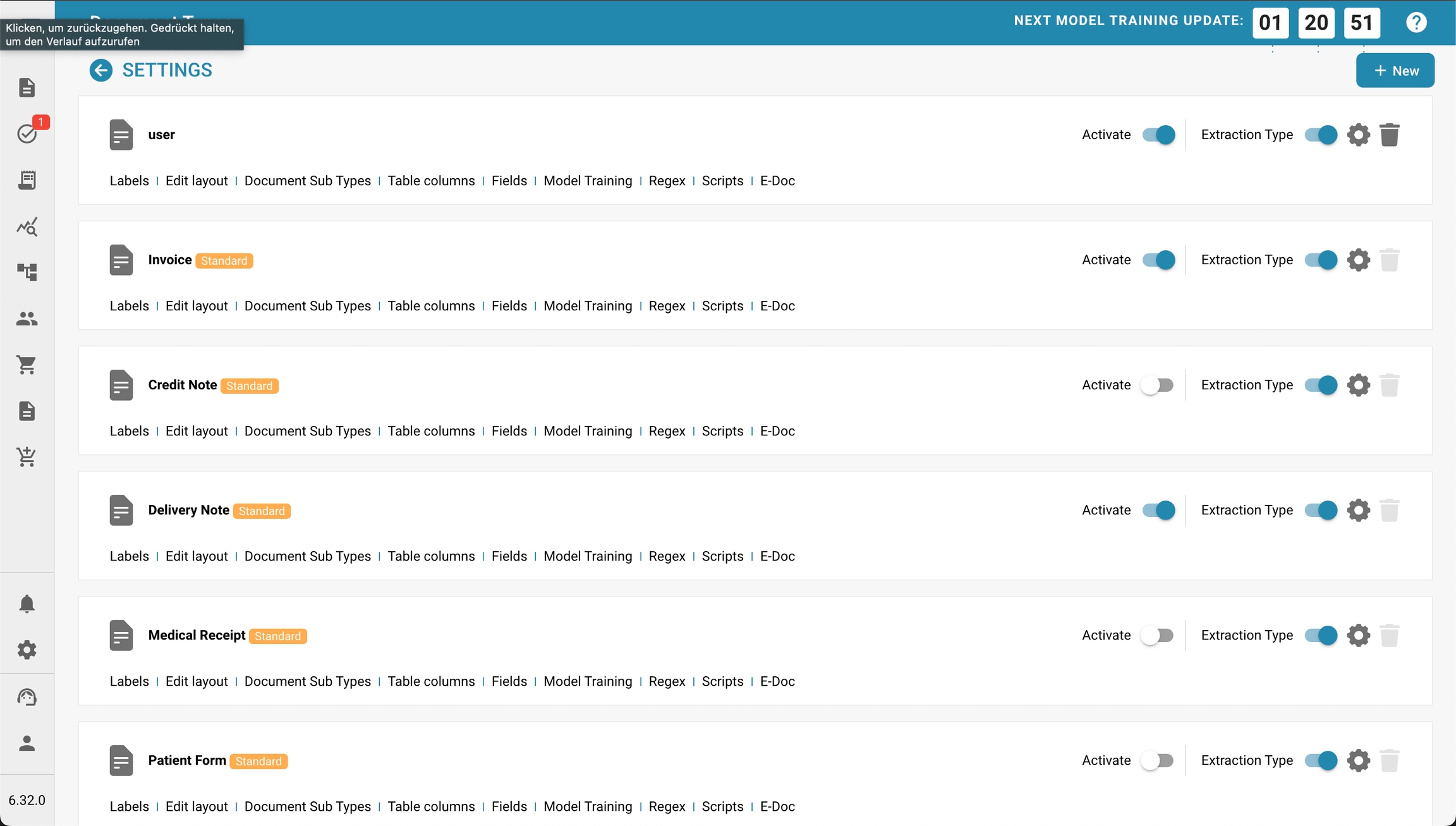

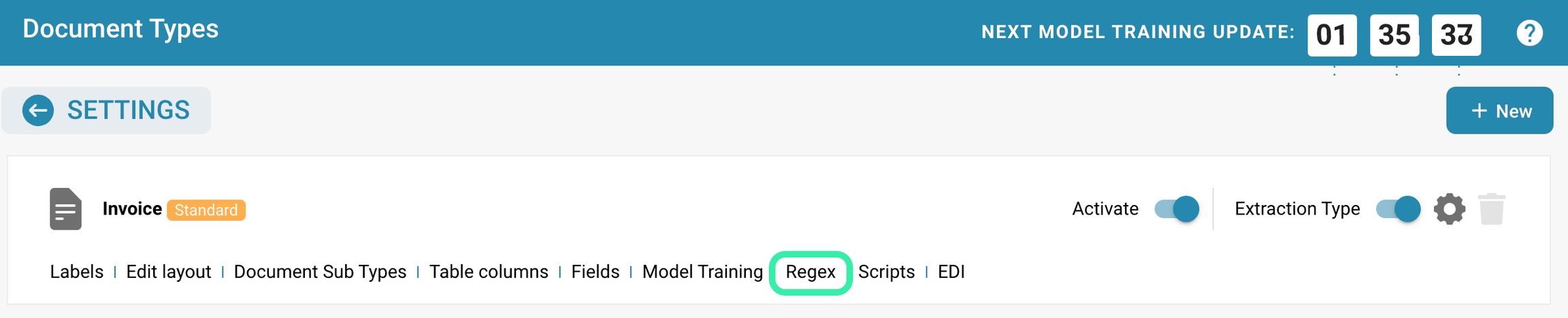

The Document Types section lists all document types that DocBits recognizes and processes. Administrators can manage various aspects of each document type, such as layout, field definitions, extraction rules, and more. This customization is essential for accurate data processing and compliance with organizational standards.

Document Type List:

Each row represents a document type such as Invoice, Credit Note, Delivery Note, etc.

Document types can be standard or custom, as indicated by labels such as "Standard."

Edit Layout: This option lets administrators change the document layout settings, including defining how the document looks and where the data fields are located.

Document Subtypes: If a document type has subcategories, this option lets administrators configure settings specific to each subtype.

Table Columns: Customize which data columns appear when the document type is viewed in lists or reports.

Fields: Manage the data fields associated with the document type, including adding new fields or modifying existing ones.

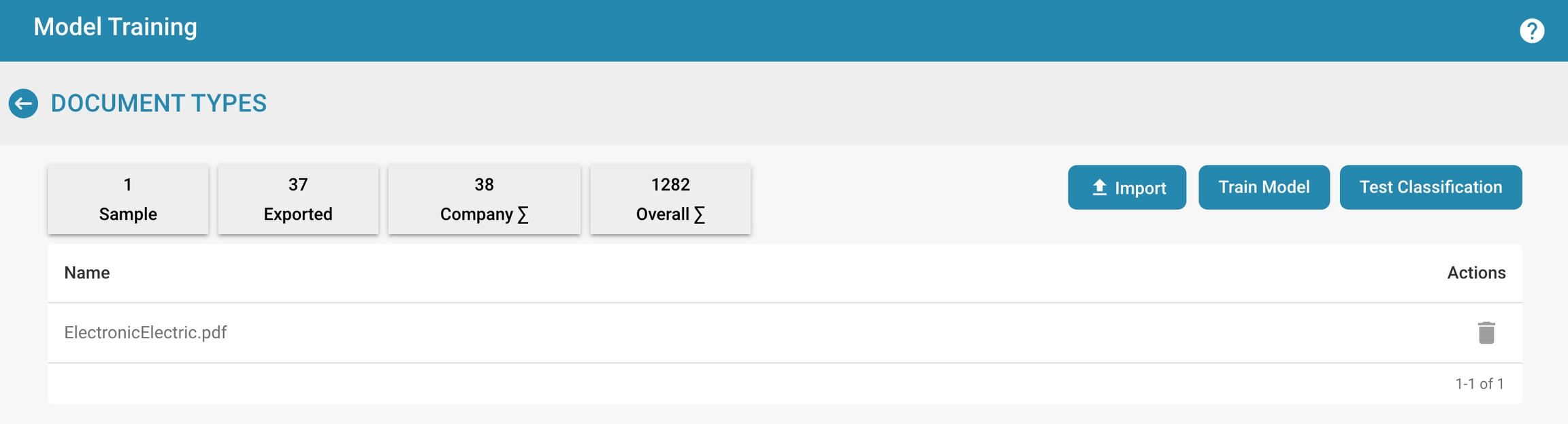

Model Training: Configure and train the model used to recognize and extract data from the documents. This can include setting parameters for machine learning models that improve over time with more data.

Regex: Set up regular expressions used to extract data from documents based on patterns. This is particularly useful for structured data extraction.

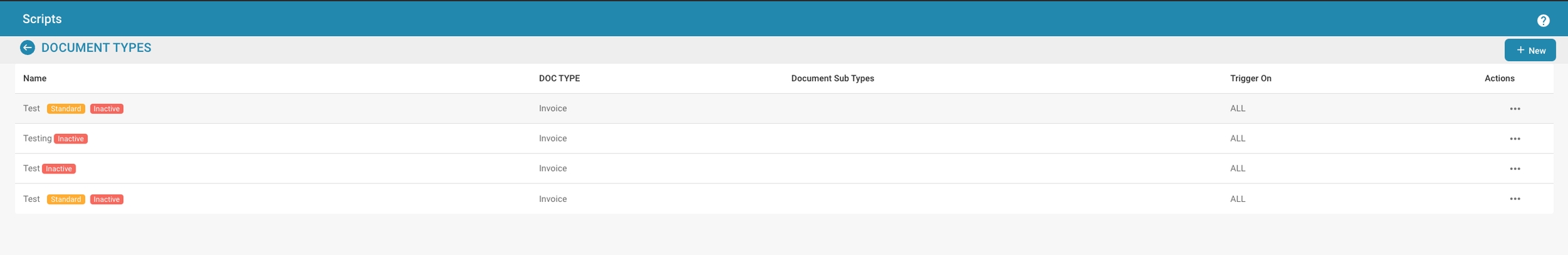

Scripts: Write or modify scripts that run custom processing rules or workflows for documents of this type.

E-DOC: Configure settings related to exchanging documents in standardized electronic formats. You can configure XRechnung, FakturaPA, or EDI.

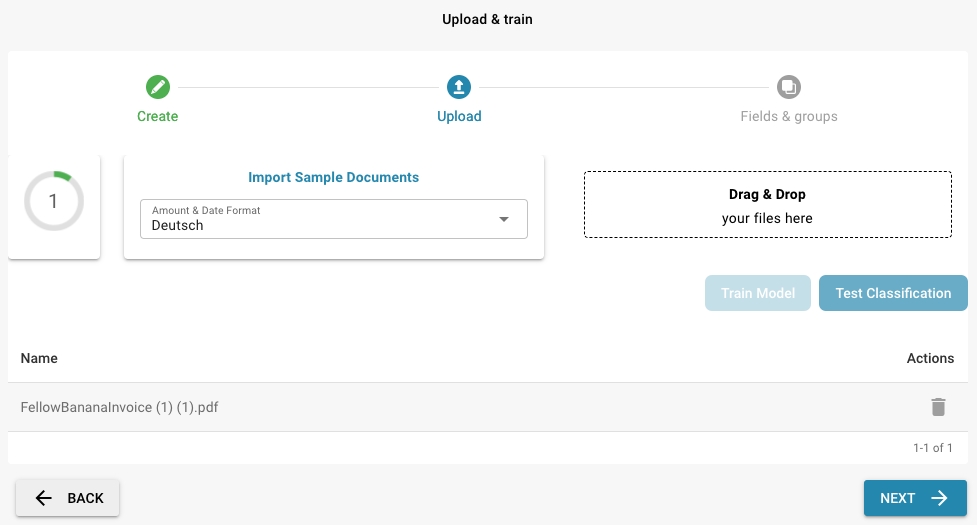

Log in: Log in to DocBits with your administrator rights.

Navigate: Go to Settings.

Document Types: Find the "Document Types" section.

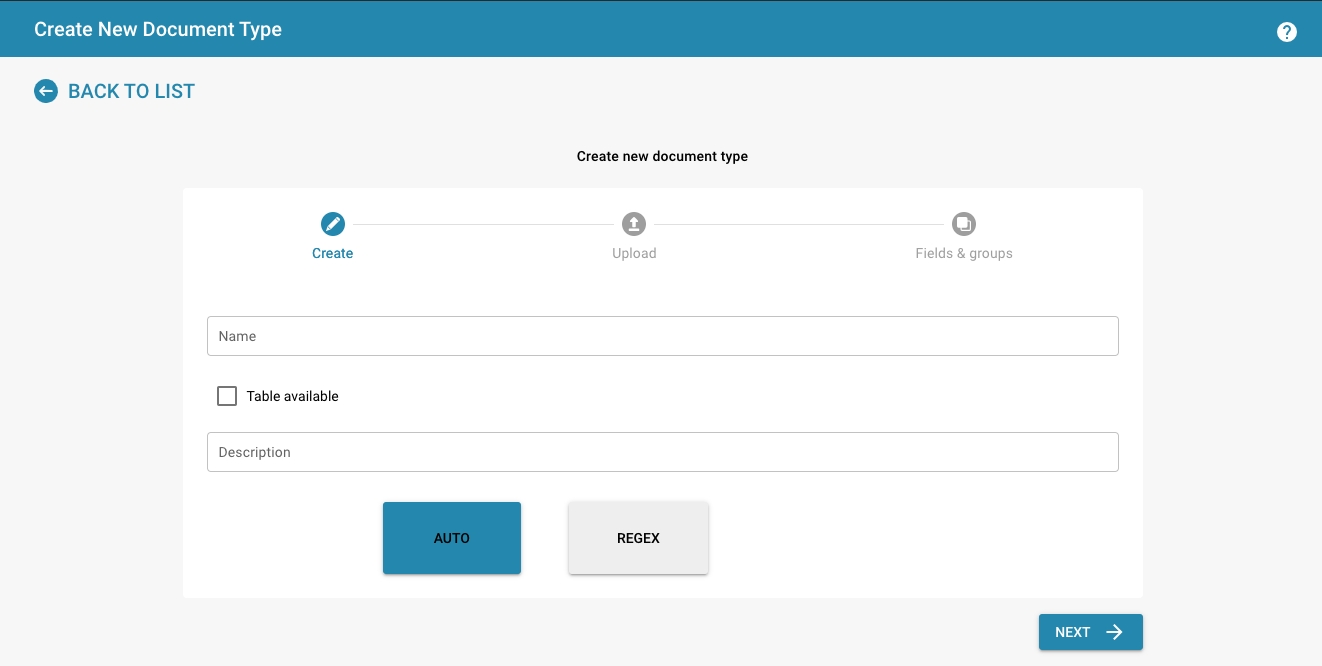

Create a new document type:

Click the "+ New" button.

Basic information:

Enter a name for the new document type (e.g. "Invoice", "Contract", "Report").

Add a description explaining the purpose and use of the document type.

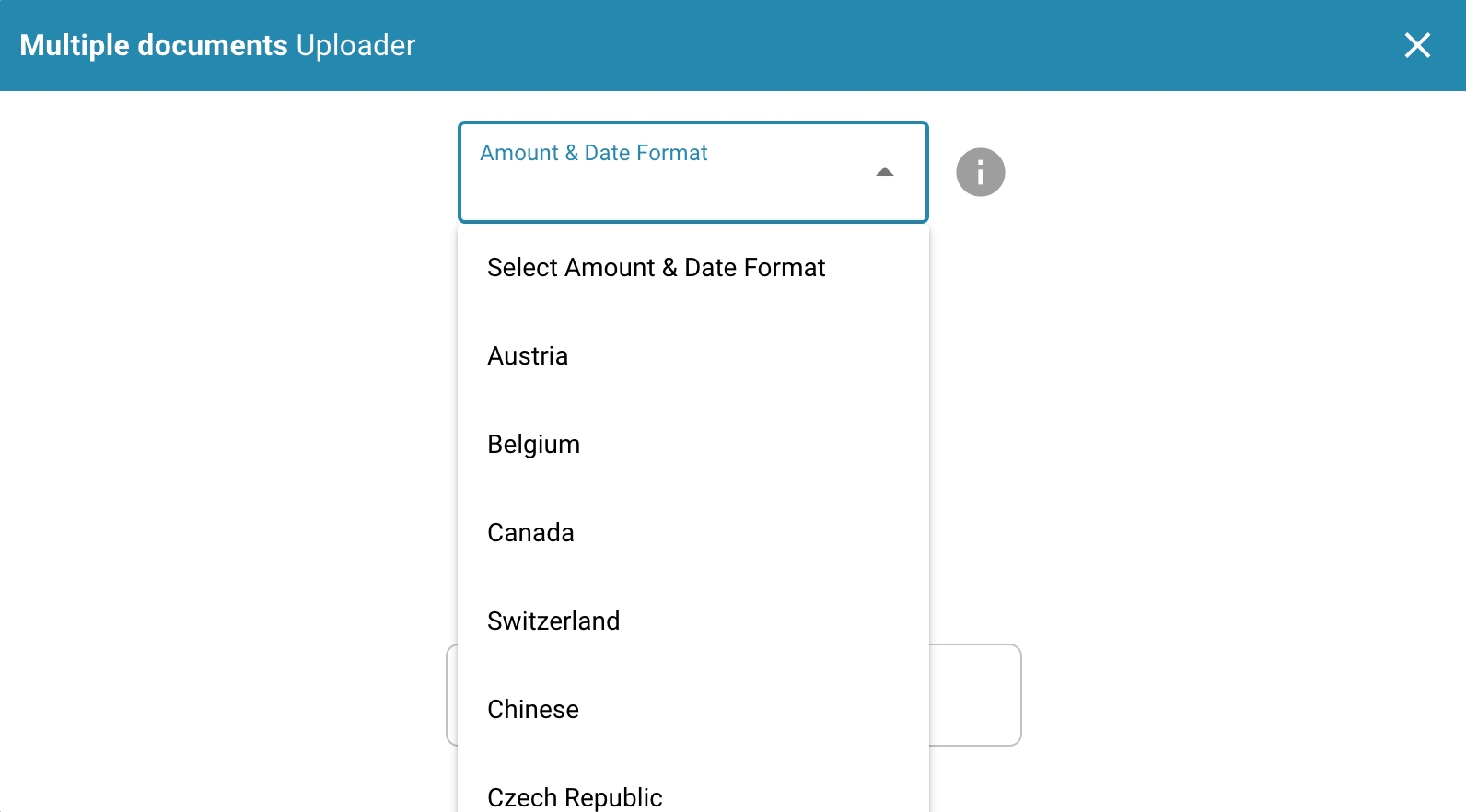

Amount and date format

Enter the format for the amount and date

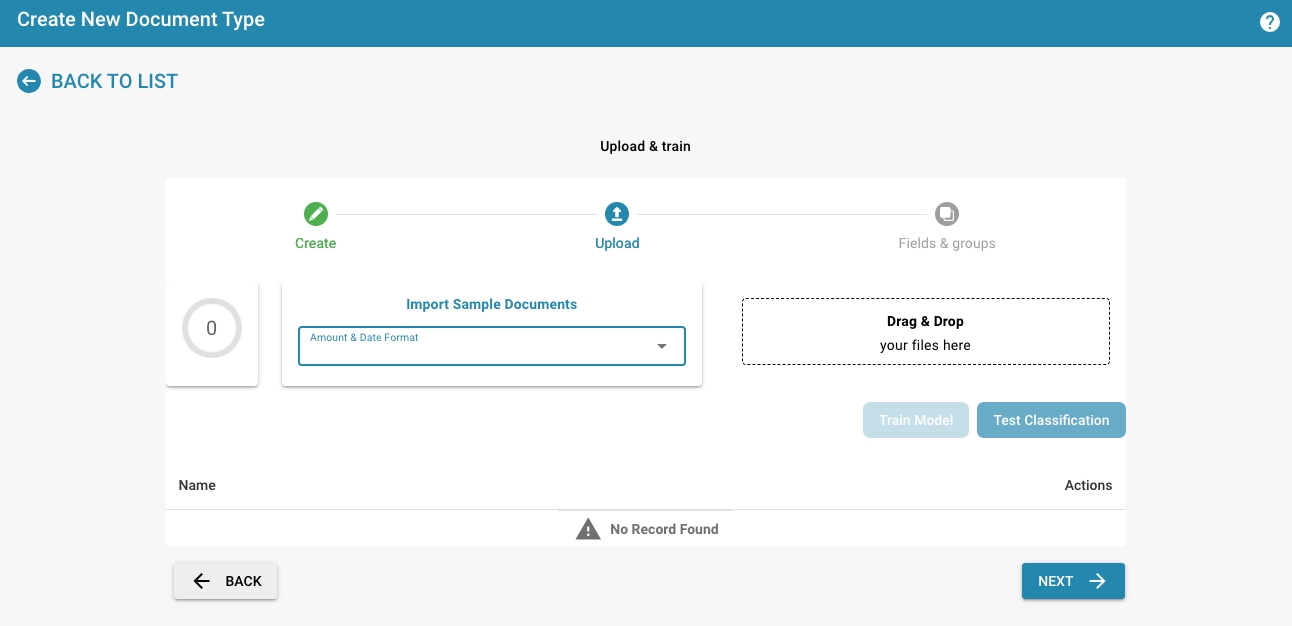

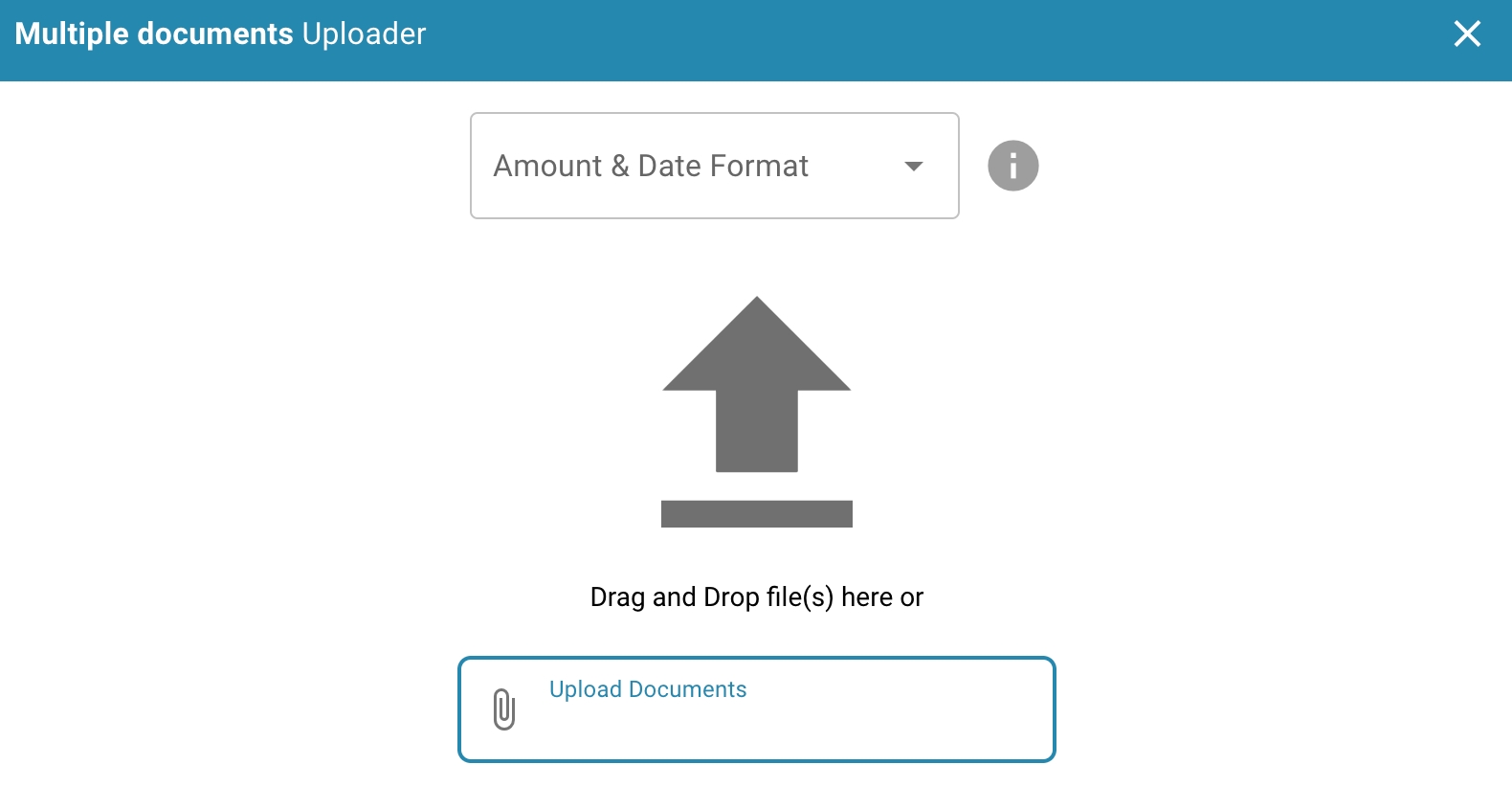

Import Sample Documents

Upload sample documents via drag & drop.

At least 10 documents must be uploaded for the training.

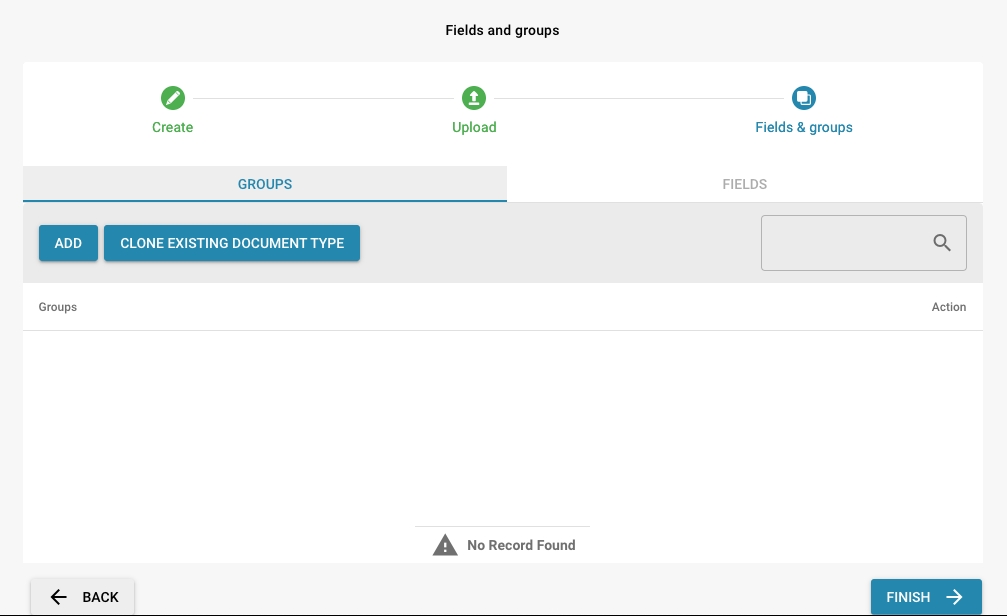

Add Groups

Click the "Add" button and enter the group name.

You can also clone an existing document type.

Add fields:

Add new fields by clicking "Add".

Enter the name of the field (e.g. "Invoice number", "Date", "Amount") and the data type (e.g. Text, Number, Date).

Finish

Once all the details are entered, click "Finish" to create the new document type.
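It can help to collect the inputs for the steps above before starting, so that nothing is missing halfway through. The sketch below is a planning aid only. `DocumentTypeDraft` is a hypothetical record, not a DocBits API object. The format notations are assumed, and the set of data types contains just the examples named above.

```python
from dataclasses import dataclass, field

MIN_SAMPLES = 10                          # the guide asks for at least 10 sample documents
DATA_TYPES = {"Text", "Number", "Date"}   # example data types named in the guide

@dataclass
class DocumentTypeDraft:
    """Hypothetical planning record; not a DocBits API object."""
    name: str
    description: str
    amount_format: str                    # e.g. "1.234,56" (assumed notation)
    date_format: str                      # e.g. "DD.MM.YYYY" (assumed notation)
    sample_files: list = field(default_factory=list)
    fields: dict = field(default_factory=dict)   # field name -> data type

    def problems(self) -> list:
        """List what is still missing before the setup steps can be completed."""
        found = []
        if not self.name.strip():
            found.append("name is empty")
        if len(self.sample_files) < MIN_SAMPLES:
            found.append(f"only {len(self.sample_files)} of {MIN_SAMPLES} sample documents")
        for fname, dtype in self.fields.items():
            if dtype not in DATA_TYPES:
                found.append(f"field '{fname}' has unknown data type '{dtype}'")
        return found

draft = DocumentTypeDraft(
    name="Contract",
    description="Signed supplier contracts",
    amount_format="1.234,56",
    date_format="DD.MM.YYYY",
    sample_files=[f"contract_{i}.pdf" for i in range(8)],
    fields={"Contract number": "Text", "Start date": "Date", "Value": "Currency"},
)
print(draft.problems())
```

For this draft, the check reports that two sample documents are still missing and that "Currency" is not among the assumed data types. Extend `DATA_TYPES` to match the types your installation actually offers.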

Select a document type:

Select the document type you want to edit from the list of existing document types.

Under the document type you will find various editing options, for example editing the layout, fields, table columns, etc.

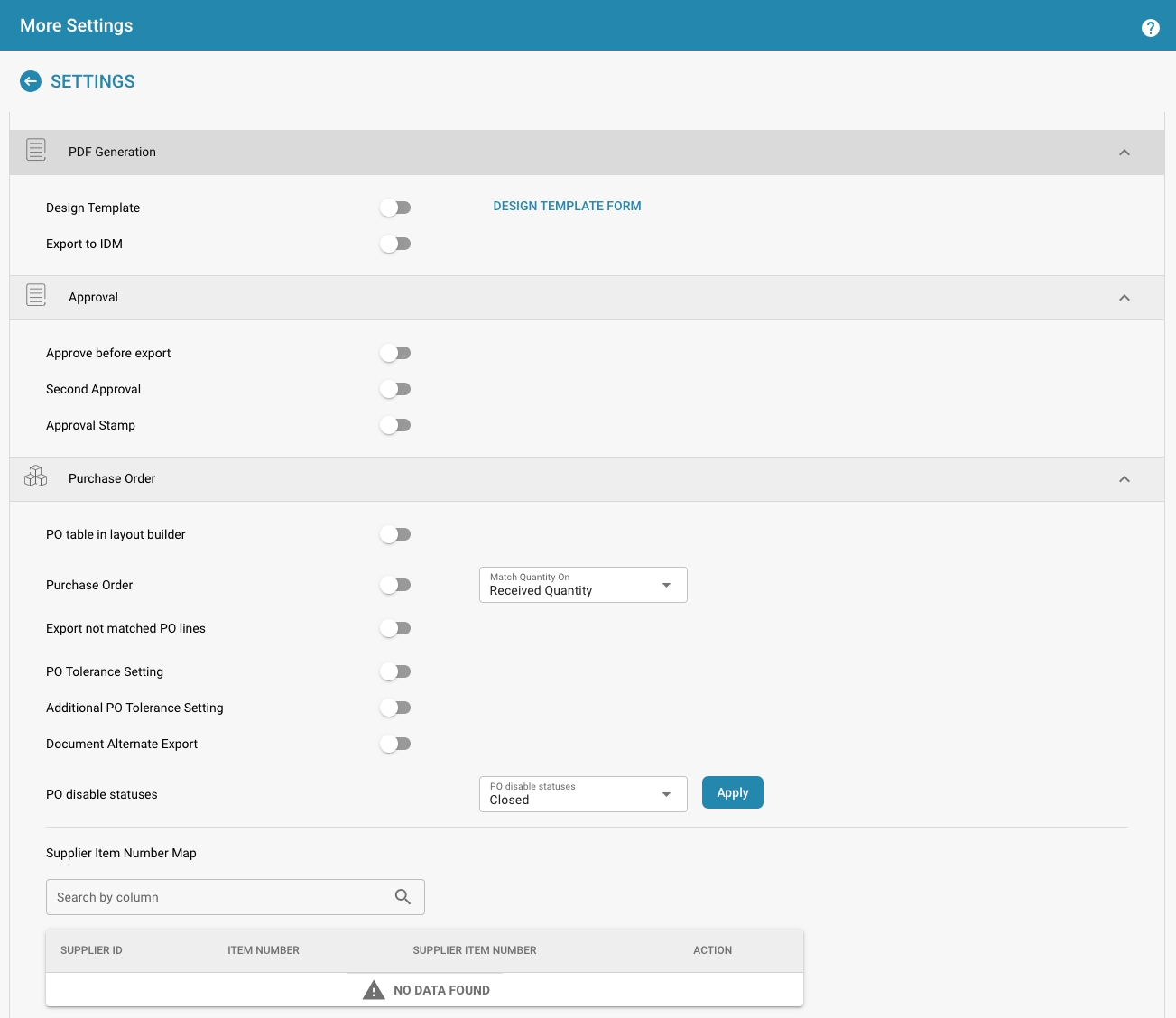

More Settings:

Click the Edit button next to the document type.

Here you can configure further settings for the document type, such as the design template and whether a document must be approved before export, among other details.

Define rules:

Go to the Extraction Rules section.

Create rules that specify how to extract data from documents. This may include using regular expressions or other pattern recognition techniques.

Test rules:

Test the extraction rules with sample documents to ensure that the data is correctly recognized and extracted.

Fine-tuning:

Adjust the extraction rules based on the test results to improve accuracy and efficiency.
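The define, test, and fine-tune cycle above can be made measurable by running the rules against a few hand-labelled samples and tracking how often each field is extracted correctly. The sketch below is an offline illustration. The rules, sample texts, and field names are invented, and it does not call DocBits.

```python
import re

# Hypothetical rules: field name -> regular expression with one capture group.
RULES = {
    "invoice_number": re.compile(r"Invoice\s+No\.?\s*:?\s*(\S+)"),
    "total": re.compile(r"Total\s*:?\s*([\d.,]+)"),
}

# Hand-labelled sample texts (made up) with the values a reviewer expects.
SAMPLES = [
    ("Invoice No: A-17\nTotal: 119,00", {"invoice_number": "A-17", "total": "119,00"}),
    ("Invoice No. B-02\nTotal 45,50", {"invoice_number": "B-02", "total": "45,50"}),
    ("Rechnung Nr. C-33\nTotal: 10,00", {"invoice_number": "C-33", "total": "10,00"}),
]

def apply_rules(text: str) -> dict:
    """Run every rule on the text; fields without a match map to None."""
    result = {}
    for name, pattern in RULES.items():
        match = pattern.search(text)
        result[name] = match.group(1) if match else None
    return result

def accuracy_per_field(samples) -> dict:
    """Share of samples in which each field was extracted exactly as expected."""
    hits = {name: 0 for name in RULES}
    for text, expected in samples:
        extracted = apply_rules(text)
        for name in RULES:
            hits[name] += extracted[name] == expected[name]
    return {name: hits[name] / len(samples) for name in RULES}

scores = accuracy_per_field(SAMPLES)
print(scores)
```

Here the `total` rule matches all three samples, while the `invoice_number` rule misses the German-labelled one. A per-field score like this shows which rule to adjust next and whether a change actually improved the results.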

Inform users:

Inform users of the new or changed document type and provide training if necessary.

Documentation:

Update system documentation to describe the new or changed document types and their usage.

By carefully setting up and managing document types in DocBits, you can ensure that documents are correctly classified and processed efficiently. This improves the overall performance of the document management system and contributes to the accuracy and productivity of your organization.

Log in: Log in to DocBits with administrator rights.

Navigate: Go to Settings.

Document Types: Find the Document Types section.

Access Document Types List

Access the list of existing document types. This list shows all defined document types, both active and inactive.

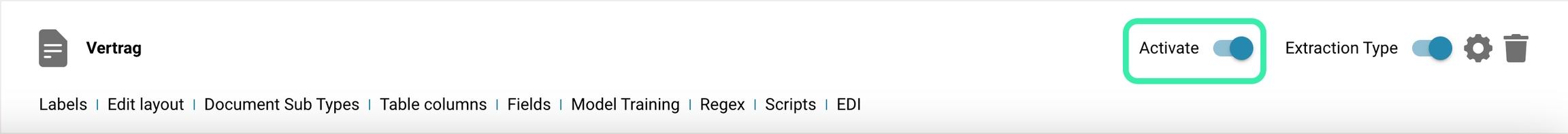

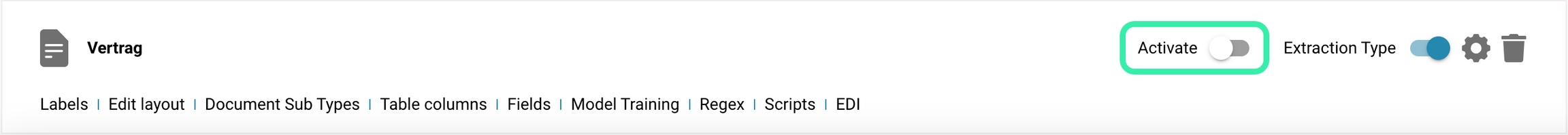

Activating or deactivating a document type

Select Document Type:

Select the document type you want to enable or disable.

Use the toggle function:

In the user interface, there is a toggle switch next to each document type that allows activation and deactivation.

Activation:

If the document type is currently deactivated, the switch may show a gray or off position.

Click the switch to activate the document type. The switch changes its position and color to indicate activation.

Deactivation:

If the document type is currently activated, the switch shows a colored or on position.

Click the switch to deactivate the document type. The switch changes its position and color to indicate deactivation.

Save:

Make sure all changes are saved. Some systems save changes automatically, while others require explicit confirmation.

Inform users:

Inform users about the activation or deactivation of the document type, especially if it impacts their work processes.

Update documentation:

Update system documentation to reflect the current status of document types.

Conclusion

The ability to enable or disable document types according to the organization's needs is a useful tool for managing document processing in DocBits. Using the toggle in the user interface, administrators can react flexibly and efficiently and keep the system aligned with current business needs.